
SPG Manual


OpenSS7

OpenSS7 STREAMS Programmer’s Guide

About This Manual

This is Edition 7.20141001, last updated 2014-10-25, of The OpenSS7 STREAMS Programmer’s Guide, for Version 1.1 release 7.20141001 of the OpenSS7 package.

Preface

Acknowledgements

As with most open source projects, this project would not have been possible without the valiant efforts and productive software of the Free Software Foundation, the Linux Kernel Community, and the open source software movement at large.

Sponsors

Funding for completion of the OpenSS7 package was provided in part by:

- Monavacon Limited
- OpenSS7 Corporation

Additional funding for The OpenSS7 Project was provided by:

Contributors

The primary contributor to the OpenSS7 package is Brian F. G. Bidulock. The following is a list of notable contributors to The OpenSS7 Project:

- Per Berquist
- Kutluk Testicioglu
- John Boyd
- John Wenker
- Chuck Winters
- Angel Diaz
- Peter Courtney
- Jérémy Compostella
- Tom Chandler
- Sylvain Chouleur
- Gurol Ackman
- Christophe Nolibos
- Pierre Crepieux
- Bryan Shupe
- Christopher Lydick
- D. Milanovic
- Omer Tunali
- Tony Abo
- John Hodgkinson
- Others

Supporters

Over the years a number of organizations have provided continued support in the form of assessment, inspection, testing, validation and certification.

Telecommunications

Aerospace and Military

Financial, Business and Security

Education, Health Care and Nuclear Power

- IEEE Computer Society
- Ateb
- ENST
- Mandexin Systems Corporation
- HTW-Saarland
- Kansas State University
- Areva NP
- University of North Carolina Charlotte
- European Organization for Nuclear Research

Agencies

It would be difficult for the OpenSS7 Project to attain the conformance and certifications that it has without the free availability of specifications documents and standards from standards bodies and industry associations. In particular, the following:

Of these, ICAO, ISO, IEEE and EIA have made at least some documents publicly available. ANSI is notably missing from the list: at one time draft documents were available from ANSI (ATIS), but that was curtailed some years ago. Telcordia does not release any standards publicly. Hopefully these organizations will see the light and realize, as the others have, that remaining current as a standards organization in today’s digital economy requires providing individuals with free access to documents.

Authors

The authors of the OpenSS7 package include:

- Brian Bidulock

Maintainer

The maintainer of the OpenSS7 package is:

- Brian Bidulock

Please send bug reports to bugs@openss7.org using the send-pr script included in the package, but only after reading the BUGS file in the release, or see ‘Problem Reports’.

Document Information

Notice

This package is released and distributed under the GNU Affero General Public License (see GNU Affero General Public License). Please note, however, that there are different licensing terms for the manual pages and some of the documentation (derived from OpenGroup publications and other sources). Consult the permission notices contained in the documentation for more information.

This document is released under the GNU Free Documentation License (see GNU Free Documentation License) with no invariant sections.

Abstract

This document provides a STREAMS Programmer’s Guide for OpenSS7.

Objective

The objective of this document is to provide a guide for the STREAMS programmer when developing STREAMS modules, drivers and application programs for OpenSS7.

This guide provides information to developers on the use of the STREAMS mechanism at user and kernel levels.

STREAMS was incorporated in UNIX System V Release 3 to augment the character input/output (I/O) mechanism and to support development of communication services.

STREAMS provides developers with integral functions, a set of utility routines, and facilities that expedite software design and implementation.

Intent

The intent of this document is to act as an introductory guide to the STREAMS programmer. It is intended to be read alone and is not intended to replace or supplement the OpenSS7 manual pages. When writing code, the manual pages (see STREAMS(9)) are a better reference for the programmer.

Although this document describes the features of the OpenSS7 package, OpenSS7 Corporation is under no obligation to provide any software, system or feature listed herein.

Audience

This document is intended for a highly technical audience. The reader should already be familiar with Linux kernel programming, the Linux file system, character devices, driver input and output, interrupts, software interrupt handling, scheduling, process contexts, multiprocessor locks, etc.

The guide is intended for network and systems programmers who use the STREAMS mechanism at user and kernel levels for Linux and UNIX system communication services.

Readers of the guide are expected to possess prior knowledge of the Linux and UNIX system, programming, networking, and data communication.

Revisions

Take care that you are working with a current version of this document: you will not be notified of updates. To ensure that you are working with a current version, contact the Author, or check The OpenSS7 Project website for a current version.

A current version of this document is normally distributed with the OpenSS7 package.

Version Control

$Log: SPG2.texi,v $

Revision 1.1.2.3 2011-07-27 07:52:12 brian
- work to support Mageia/Mandriva compressed kernel modules and URPMI repo

Revision 1.1.2.2 2011-02-07 02:21:33 brian
- updated manuals

Revision 1.1.2.1 2009-06-21 10:40:06 brian
- added files to new distro

ISO 9000 Compliance

Only the TeX, texinfo, or roff source for this document is controlled. An opaque (printed, postscript or portable document format) version of this document is an UNCONTROLLED VERSION.

Disclaimer

OpenSS7 Corporation disclaims all warranties with regard to this documentation including all implied warranties of merchantability, fitness for a particular purpose, non-infringement, or title; that the contents of the document are suitable for any purpose, or that the implementation of such contents will not infringe on any third party patents, copyrights, trademarks or other rights. In no event shall OpenSS7 Corporation be liable for any direct, indirect, special or consequential damages or any damages whatsoever resulting from loss of use, data or profits, whether in an action of contract, negligence or other tortious action, arising out of or in connection with any use of this document or the performance or implementation of the contents thereof.

OpenSS7 Corporation reserves the right to revise this software and documentation for any reason, including but not limited to, conformity with standards promulgated by various agencies, utilization of advances in the state of the technical arts, or the reflection of changes in the design of any techniques, or procedures embodied, described, or referred to herein. OpenSS7 Corporation is under no obligation to provide any feature listed herein.

U.S. Government Restricted Rights

If you are licensing this Software on behalf of the U.S. Government ("Government"), the following provisions apply to you. If the Software is supplied by the Department of Defense ("DoD"), it is classified as "Commercial Computer Software" under paragraph 252.227-7014 of the DoD Supplement to the Federal Acquisition Regulations ("DFARS") (or any successor regulations) and the Government is acquiring only the license rights granted herein (the license rights customarily provided to non-Government users). If the Software is supplied to any unit or agency of the Government other than DoD, it is classified as "Restricted Computer Software" and the Government’s rights in the Software are defined in paragraph 52.227-19 of the Federal Acquisition Regulations ("FAR") (or any successor regulations) or, in the cases of NASA, in paragraph 18.52.227-86 of the NASA Supplement to the FAR (or any successor regulations).

Organization

This guide has several chapters, each discussing a unique topic. Introduction, Overview, Mechanism and Processing contain introductory information and can be ignored by those already familiar with STREAMS concepts and facilities.

This document is organized as follows:

- Preface

Describes the organization and purpose of the guide. It also defines an intended audience and an expected background of the users of the guide.

- Introduction

An introduction to STREAMS and the OpenSS7 package, covering STREAMS fundamentals. Presents an overview and the benefits of STREAMS.

- Overview

A brief overview of STREAMS.

- Mechanism

A description of the STREAMS framework. Describes the basic operations for constructing, using, and dismantling Streams. These operations are performed using open(2s), close(2s), read(2s), write(2s), and ioctl(2s).

- Processing

Processing and procedures within the STREAMS framework. Gives an overview of the STREAMS put and service routines.

- Messages

STREAMS Messages, organization, types, priority, queueing, and general handling. Discusses STREAMS messages, their structure, linkage, queueing, and interfacing with other STREAMS components.

- Polling

Polling of STREAMS file descriptors and other asynchronous application techniques. Describes how STREAMS allows user processes to monitor, control, and poll Streams to allow an effective utilization of system resources.

- Modules and Drivers

An overview of STREAMS modules, drivers and multiplexing drivers. Describes the STREAMS module and driver environment, input-output controls, routines, declarations, flush handling, driver-kernel interface, and also provides general design guidelines for modules and drivers.

- Modules

Details of STREAMS modules, including examples. Provides information on module construction and function.

- Drivers

Details of STREAMS drivers, including examples. Discusses STREAMS drivers, elements of driver flow control, flush handling, cloning, and processing.

- Multiplexing

Details of STREAMS multiplexing drivers, including examples. Describes the STREAMS multiplexing facility.

- Pipes and FIFOs

Details of STREAMS-based Pipes and FIFOs. Provides information on creating, writing, reading, and closing of STREAMS-based pipes and FIFOs and unique connections.

- Terminal Subsystem

Details of STREAMS-based Terminals and Pseudo-terminals. Discusses STREAMS-based terminal and pseudo-terminal subsystems.

- Synchronization

Discusses STREAMS in a symmetrical multi-processor environment.

- Reference

Reference section.

- Conformance

Conformance of the OpenSS7 package to other UNIX implementations of STREAMS.

- Portability

Portability of STREAMS modules and drivers written for other UNIX implementations of STREAMS, and how they can most easily be ported into OpenSS7. For more details on this topic, see the OpenSS7 - STREAMS Portability Guide.

- Development

Development guidelines for developing portable STREAMS modules and drivers.

- Data Structures

Primary STREAMS Data Structures, descriptions of their members, flags, constants and use. Summarizes data structures commonly used by STREAMS modules and drivers.

- Message Types

STREAMS Message Type reference, with descriptions of each message type. Describes STREAMS messages and their use.

- Utilities

STREAMS kernel-level utility functions for the module or driver writer. Describes STREAMS utility routines and their usage.

- Debugging

STREAMS debugging facilities and their use. Provides debugging aids for developers.

- Configuration

STREAMS configuration, the STREAMS Administrative Driver and the autopush facility. Describes how modules and drivers are configured into the Linux and UNIX system, tunable parameters, and the autopush facility.

- Administration

Administration of the STREAMS subsystem.

- Examples

Collected examples.

Conventions Used

This guide uses texinfo typographical conventions.

Throughout this guide, the word STREAMS will refer to the mechanism and the word Stream will refer to the path between a user application and a driver. In connection with STREAMS-based pipes, Stream refers to the data transfer path in the kernel between the kernel and one or more user processes.

Examples are given to highlight the most important and common capabilities of STREAMS. They are not exhaustive and, for simplicity, often reference fictional drivers and modules. Some examples are also present in the OpenSS7 package, both for testing and example purposes.

System calls, STREAMS utility routines, header files, and data structures are given using texinfo filename typesetting when they are mentioned in the text.

Variable names, pointers, and parameters are given using texinfo variable typesetting conventions. Routine, field, and structure names unique to the examples are also given using texinfo variable typesetting conventions when they are mentioned in the text.

Declarations and short examples are in texinfo ‘sample’ typesetting.

texinfo displays are used to show program source code.

Data structure formats are also shown in texinfo displays.

Other Documentation

Although the STREAMS Programmer’s Guide for OpenSS7 provides a guide to aid in developing STREAMS applications, readers are encouraged to consult the OpenSS7 manual pages. When writing code, the manual pages (see STREAMS(9)) are a better reference for the programmer.

For detailed information on system calls used by STREAMS, see section 2 of the manual pages; for STREAMS utilities, see section 8.

STREAMS-specific input-output control (ioctl) calls are described in streamio(7).

STREAMS modules and drivers are described in section 7.

STREAMS is also described to some extent in the System V Interface Definition, Third Edition.

UNIX Edition

This system conforms to UNIX System V Release 4.2 for Linux.

Related Manuals

OpenSS7 Installation and Reference Manual

Copyright

© 1997-2014 Monavacon Limited. All Rights Reserved.

1 Introduction

1.1 Background

STREAMS is a facility first presented in a paper by Dennis M. Ritchie in 1984, originally implemented on 4.1BSD and later part of Bell Laboratories Eighth Edition UNIX, incorporated into UNIX System V Release 3.0 and enhanced in UNIX System V Release 4 and UNIX System V Release 4.2. STREAMS was used in SVR4 for terminal input/output, pseudo-terminals, pipes, named pipes (FIFOs), interprocess communication and networking. Since its release in System V Release 4, STREAMS has been implemented across a wide range of UNIX, UNIX-like, and UNIX-based systems, making its implementation and use a de facto standard.

STREAMS is a facility that allows for a reconfigurable full-duplex communications path, called a Stream, between a user process and a driver in the kernel. Kernel protocol modules can be pushed onto and popped from the Stream between the user process and driver. The Stream can be reconfigured in this way by a user process. The user process, neighbouring protocol modules and the driver communicate with each other using a message passing scheme closely related to MOM (Message Oriented Middleware). This permits a loose coupling between protocol modules, drivers and user processes, allowing a third-party and loadable kernel module approach to be taken toward the provisioning of protocol modules on platforms supporting STREAMS.

On UNIX System V Release 4.2, STREAMS was used for terminal input-output, pipes, FIFOs (named pipes), and network communications. Modern UNIX, UNIX-like and UNIX-based systems providing STREAMS normally support some degree of network communications using STREAMS; however, many do not support STREAMS-based pipes and FIFOs or terminal input-output.

Linux has not traditionally implemented a STREAMS subsystem. It is not clear why; however, perceived ideological differences between STREAMS and Sockets, and between the XTI/TLI and Sockets interfaces to Internet Protocol services, are usually at the centre of the debate. For additional details on the debate, see About This Manual in OpenSS7 Frequently Asked Questions.

Linux pipes and FIFOs are SVR3-style, and the Linux terminal subsystem is BSD-like. UNIX 98 Pseudo-Terminals, ptys, have a specialized implementation that does not follow the STREAMS framework and, therefore, do not support the pushing or popping of STREAMS modules. Internal networking implementation under Linux follows the BSD approach with a native (system call) Sockets interface only.

RedHat at one time provided an Intel Binary Compatibility Suite (iBCS) module for Linux that supported the XTI/TLI interface and socksys system calls and input-output controls, but not the STREAMS framework (and therefore cannot push or pop modules).

OpenSS7 is the current open source implementation of STREAMS for Linux and provides all of the capabilities of UNIX System V Release 4.2 MP, plus support for mainstream UNIX implementations based on UNIX System V Release 4.2 MP through compatibility modules.

Although it is intended primarily as documentation for the OpenSS7 implementation of STREAMS, much of the OpenSS7 - STREAMS Programmer’s Guide is generally applicable to all STREAMS implementations.

1.2 What is STREAMS?

STREAMS is a flexible, message oriented framework for the development of GNU/Linux communications facilities and protocols. It provides a set of system calls, kernel resources, and kernel utilities within a framework that is applicable to a wide range of communications facilities including terminal subsystems, interprocess communication, and networking. It provides standard interfaces for communication input and output within the kernel, common facilities for device drivers, and a standard interface between the kernel and the rest of the GNU/Linux system.

The standard interface and mechanism enable modular, portable development and easy integration of high-performance network services and their components. Because it is a message passing architecture, STREAMS does not impose a specific network architecture (as does the BSD Sockets kernel architecture). The STREAMS user interface uses the familiar UNIX character special file input and output mechanisms (open(2s), read(2s), write(2s), ioctl(2s) and close(2s)) and provides additional system calls (poll(2s), getmsg(2s), getpmsg(2s), putmsg(2s) and putpmsg(2s)) to assist in message passing between user-level applications and kernel-resident modules. STREAMS also defines a standard set of input-output controls (ioctl(2s)) for manipulation and configuration of STREAMS by a user-space application.

As a message passing architecture, the STREAMS interface between the user process and kernel resident modules can be treated either as fully synchronous exchanges or can be treated asynchronously for maximum performance.
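The following minimal sketch illustrates the asynchronous style. It uses the POSIX poll() call to wait until a descriptor becomes readable, or until a timeout expires, before calling read(2s). It works on any file descriptor, including one obtained by opening a STREAMS device. The helper name wait_and_read() and its timeout convention are illustrative assumptions and are not part of the OpenSS7 package. A STREAMS-aware application might also request events such as POLLPRI or POLLRDBAND, which this sketch does not examine.

```c
#include <errno.h>
#include <poll.h>
#include <unistd.h>

/*
 * Hypothetical helper (not an OpenSS7 routine): wait up to timeout_ms
 * milliseconds for fd to become readable, then read at most len bytes.
 * Returns the count from read() (0 at end of file), or -1 with errno
 * set; errno is ETIMEDOUT when nothing arrived in time.
 */
ssize_t wait_and_read(int fd, void *buf, size_t len, int timeout_ms)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN };
    int n;

    do {
        n = poll(&pfd, 1, timeout_ms);
    } while (n < 0 && errno == EINTR);   /* retry if interrupted by a signal */

    if (n < 0)
        return -1;                       /* poll() itself failed */
    if (n == 0) {
        errno = ETIMEDOUT;               /* timed out: nothing to read */
        return -1;
    }
    if (pfd.revents & (POLLIN | POLLHUP))
        return read(fd, buf, len);       /* data or hangup is pending */
    errno = EIO;                         /* POLLERR or POLLNVAL reported */
    return -1;
}
```

A synchronous program simply calls read(2s) and blocks. Polling first lets a single process service many Streams, or give up cleanly after a timeout, without blocking on any one of them.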

1.2.1 Characteristics

STREAMS has the following characteristics that are not exhibited (or are exhibited in different ways) by other kernel level subsystems:

- STREAMS is based on the character device special file which is one of the most flexible special files available in the GNU/Linux system.

- STREAMS is a message passing architecture, similar to Message Oriented Middleware (MOM), that achieves a high degree of functional decoupling between modules. This allows the service interface between modules to correspond to the natural interfaces found or described between protocol layers in a protocol stack without requiring the implementation to conform to any given model.

As a contrasting example, the BSD Sockets implementation, internal to the kernel, provides strict socket-protocol, protocol-protocol and protocol-device function call interfaces.

- By using put and service procedures for each module, and by scheduling service procedures, STREAMS combines background scheduling of coroutine service procedures with message queueing and flow control to provide a mechanism that is robust for both event-driven and soft real-time subsystems.

In contrast, BSD Sockets, internal to the kernel, requires the sending component across the socket-protocol, protocol-protocol, or protocol-device interface to handle flow control. STREAMS integrates flow control within the STREAMS framework.

- STREAMS permits user runtime configuration of kernel data structures and modules to provide for a wide range of novel configurations and capabilities in a live GNU/Linux system. The BSD Sockets protocol framework does not provide this capability.

- STREAMS is as applicable to terminal input-output and interprocess communication as it is to networking protocols.

BSD Sockets is only applicable to a restricted range of networking protocols.

- STREAMS provides mechanisms (the pushing and popping of modules, and the linking and unlinking of Streams under multiplexing drivers) for complex configuration of protocol stacks; the precise topology is typically under the control of user space daemon processes.

No other kernel protocol stack framework provides this flexible capability. Under BSD Sockets it is necessary to define specialized socket types to perform these configuration functions, and not in any standard way.

1.2.2 Components

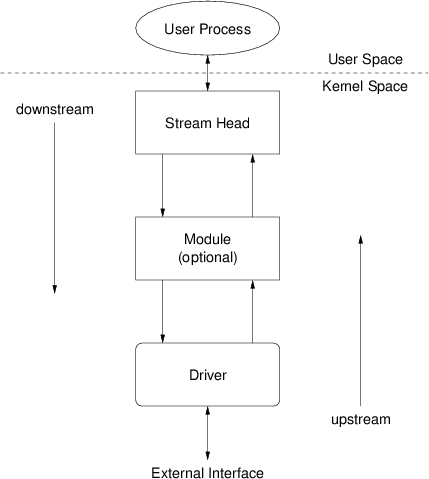

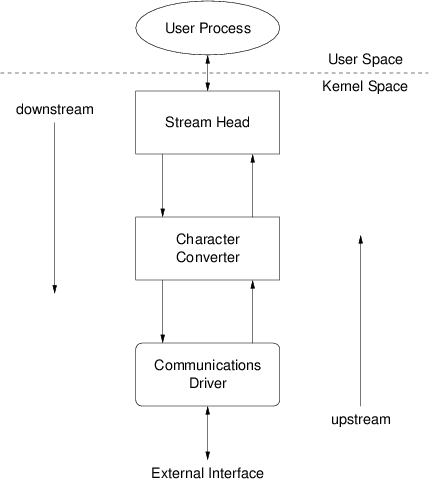

STREAMS provides a full-duplex communications path for data and control information between a kernel-resident driver and a user space process (see Figure 101).

Within the kernel, a Stream consists of the following basic components:

- A Stream head that is inside the Linux kernel, but which sits closest to the user space process. The Stream head is responsible for communicating with user space processes and presents the standard STREAMS I/O interface to user space processes and applications.

- A Stream end or Driver that is inside the Linux kernel, but which sits farthest from the user space process. The Stream end or Driver interfaces to hardware or other mechanisms within the Linux kernel.

- A Module that sits between the Stream head and Stream end. The Module provides modular and flexible processing of control and data information passed up and down the Stream.

1.2.2.1 Stream head

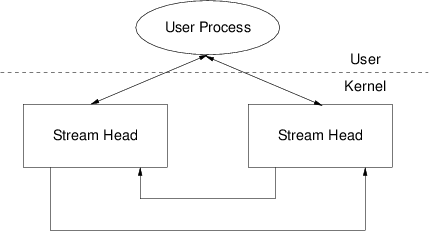

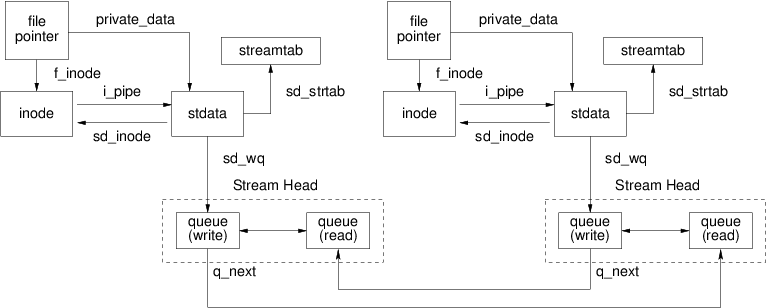

A Stream head is the component of a Stream that is closest to the user space process. The Stream head is responsible for communicating directly with the user space process in user context. It converts system calls into actions performed on the Stream head, and it converts the control and data information passed between the user space process and the Stream in response to those system calls. All Streams are associated with a Stream head. In the case of STREAMS-based pipes, the Stream may be associated with two (interconnected) Stream heads. Because the Stream head follows the same structure as a Module, it can be viewed as a specialized module.

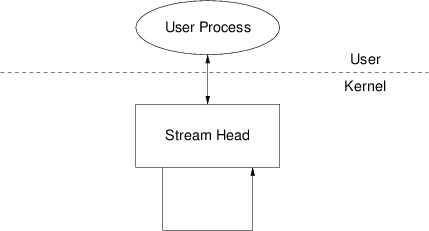

With STREAMS, pipes and FIFOs are also STREAMS-based. STREAMS-based pipes and FIFOs do not have a Driver component.

STREAMS-based pipes place another Stream head in the position of the Driver. That is, a STREAMS-based pipe is a full-duplex communications path between two otherwise independent Stream heads. Modules may be placed between the Stream heads in the same fashion as they can exist between a Stream head and a Driver in a normal Stream. A STREAMS-based pipe is illustrated in Figure 102.

STREAMS-based FIFOs consist of a single Stream head that has its downstream path connected to its upstream path where the Driver would be located. Modules can be pushed under this single Stream head. A STREAMS-based FIFO is illustrated in Figure 109.

For more information on STREAMS-based pipes and FIFOs, see Pipes and FIFOs.

1.2.2.2 Module

A STREAMS Module is an optional processing element that is placed between the Stream head and the Stream end. The Module can perform processing functions on the data and control information flowing in either direction on the Stream. It can communicate with neighbouring modules, the Stream head or a Driver using STREAMS messages. Each Module is self-contained in the sense that it does not directly invoke functions provided by, nor access data structures of, neighbouring modules, but rather communicates data, status and control information using messages. This functional isolation provides a loose coupling that permits flexible recombination and reuse of Modules. A Module follows the same framework as the Stream head and Driver, has all of the same entry points and can use all of the same STREAMS and kernel utilities to perform its function.

Modules can be inserted between a Stream head and Stream end (or another Stream head in the case of a STREAMS-based pipe or FIFO). The insertion and deletion of Modules from a Stream is referred to as pushing and popping a Module, because modules are inserted or removed from just beneath the Stream head in a push-down stack fashion. Pushing and popping of modules can be performed using standard ioctl(2s) calls and can be performed by user space applications without any need for kernel programming, assembly, or relinking.
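A small user-space model can illustrate the push-down behaviour. It is a toy, not the kernel implementation: the toy_stream type, its fixed depth of 8, and the helper names are assumptions made for illustration. On a real Stream, an application would instead issue the I_PUSH and I_POP input-output controls described in streamio(7).

```c
#include <stddef.h>

#define TOY_MAXPUSH 8   /* assumed limit, for this model only */

/* Toy model of a Stream's module stack: mods[depth-1] sits just beneath
 * the Stream head, mods[0] sits just above the Driver. */
struct toy_stream {
    const char *mods[TOY_MAXPUSH];
    size_t depth;
};

/* Push: insert a module immediately beneath the Stream head. */
int toy_push(struct toy_stream *s, const char *name)
{
    if (s->depth == TOY_MAXPUSH)
        return -1;                  /* stack full */
    s->mods[s->depth++] = name;
    return 0;
}

/* Pop: remove the module immediately beneath the Stream head. */
const char *toy_pop(struct toy_stream *s)
{
    if (s->depth == 0)
        return NULL;                /* no modules to pop */
    return s->mods[--s->depth];
}

/* Name of the module directly under the Stream head, or NULL if none. */
const char *toy_top(const struct toy_stream *s)
{
    return s->depth ? s->mods[s->depth - 1] : NULL;
}
```

Pushing a hypothetical modA and then modB leaves modB directly beneath the Stream head, so modB is the first module popped.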

For more information on STREAMS modules, see Module Component.

1.2.2.3 Driver

All Streams, with the sole exception of STREAMS-based pipes and FIFOs, contain a Driver at the Stream end. A STREAMS Driver can either be a device driver that directly or indirectly controls hardware, or a pseudo-device driver that interfaces with other software subsystems within the kernel. STREAMS drivers normally perform little processing within the STREAMS framework. They typically only convert between STREAMS messages and hardware or software events (e.g. interrupts), and between STREAMS framework data structures and device related data structures.

For more information on STREAMS drivers, see Driver Component.

1.2.2.4 Queues

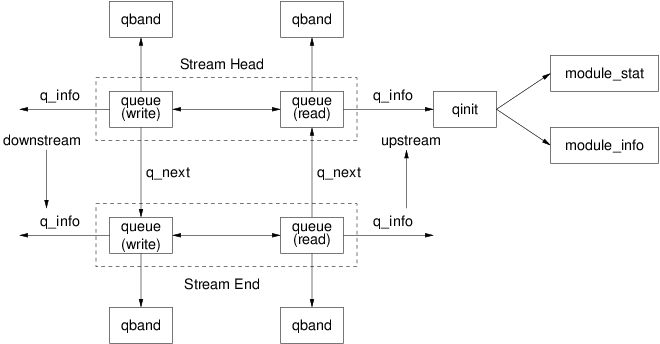

Each component in a Stream (Stream head, Module, Driver) has an associated pair of queues. One queue in each pair is responsible for managing the message flow in the downstream direction from Stream head to Stream end; the other for the upstream direction. The downstream queue is called the write-side queue in the queue pair; the upstream queue, the read-side queue.

Each queue in the pair provides pointers necessary for organizing the temporary storage and management of STREAMS messages on the queue. It also provides function pointers to procedures to be invoked when messages are placed on the queue or need to be taken off of the queue, and pointers to auxiliary and module-private data structures. The read-side queue also contains function pointers to procedures used to open and close the Stream head, Module or Driver instance associated with the queue pair.

Queue pairs are dynamically allocated when an instance of the driver, module or Stream head is created and deallocated when the instance is destroyed.
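The following sketch shows how the read-side and write-side queues are paired. It assumes the SVR4-style layout, in which the two queues of a pair are allocated together with the read side first, and the RD(), WR() and OTHERQ() utilities move between them. The toy_queue structure and the toy_* helpers are a simplified user-space model under that assumption. They are not the OpenSS7 queue_t definition.

```c
#include <stdlib.h>

/* Toy model of one queue; a real queue_t carries many more fields. */
struct toy_queue {
    int is_read_side;                              /* nonzero: upstream queue */
    void (*put)(struct toy_queue *q, void *msg);   /* put procedure */
    struct toy_queue *next;                        /* next queue, same direction */
    void *priv;                                    /* module-private data */
};

/* Allocate a pair as one array: [0] read side, [1] write side.
 * Returns a pointer to the read-side queue, or NULL on failure. */
struct toy_queue *toy_allocq(void)
{
    struct toy_queue *qp = calloc(2, sizeof *qp);
    if (qp != NULL)
        qp[0].is_read_side = 1;
    return qp;
}

/* Unlike the real macros, these toy versions accept either queue. */
struct toy_queue *toy_rd(struct toy_queue *q)     { return q->is_read_side ? q : q - 1; }
struct toy_queue *toy_wr(struct toy_queue *q)     { return q->is_read_side ? q + 1 : q; }
struct toy_queue *toy_otherq(struct toy_queue *q) { return q->is_read_side ? q + 1 : q - 1; }

/* Free a pair, given either of its queues. */
void toy_freeq(struct toy_queue *q) { free(toy_rd(q)); }
```

Allocating both halves in one block makes the pair's lifetime match the instance, as the text describes, and lets each side find its partner with simple pointer arithmetic.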

For more information on STREAMS queues, see Queue Component.

1.2.2.5 Messages

STREAMS is a message passing architecture. STREAMS messages can contain control information, data, or both. Messages that contain control information are intended to elicit a response from a neighbouring module, Stream head or Stream end. The control information typically uses the message type to invoke a general function and the fields in the control part of the message as arguments to a call to the function. The data portion of a message represents information that is (from the perspective of the STREAMS framework) unstructured. Only cooperating modules, the Stream head or Stream end need know or agree upon the format of control or data messages.

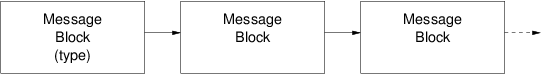

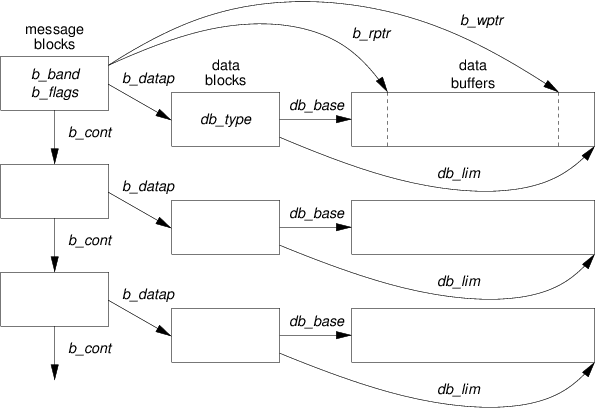

A STREAMS message consists of one or more blocks. Each block is a 3-tuple of a message block, a data block and a data buffer. Each data block has a message type, and the data buffer contains the control information or data associated with each block in the message. STREAMS messages typically consist of one control-type block (M_PROTO) and zero or more data-type blocks (M_DATA), or just a data-type block.
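A toy model of the 3-tuple makes the structure more concrete. The field names echo those of the real mblk_t and dblk_t (b_rptr, b_wptr, b_cont, db_type), but the structures below are simplified assumptions for illustration. The counting function mirrors what the msgdsize(9) utility reports: the number of data bytes held in the M_DATA blocks of a message.

```c
#include <stddef.h>

/* Stand-ins for M_DATA and M_PROTO; the numeric values are arbitrary. */
enum toy_msgtype { TOY_M_DATA, TOY_M_PROTO };

/* Toy data block: carries the message type and bounds the data buffer. */
struct toy_dblk {
    enum toy_msgtype db_type;
    unsigned char *db_base;       /* start of the data buffer */
    unsigned char *db_lim;        /* one past the end of the buffer */
};

/* Toy message block: a window [b_rptr, b_wptr) onto the data buffer. */
struct toy_mblk {
    struct toy_mblk *b_cont;      /* next block of the same message */
    unsigned char *b_rptr;        /* first unread byte */
    unsigned char *b_wptr;        /* one past the last written byte */
    struct toy_dblk *b_datap;     /* associated data block */
};

/* Count the bytes in the data-type blocks of a message, as msgdsize(9) does. */
size_t toy_msgdsize(const struct toy_mblk *mp)
{
    size_t total = 0;
    for (; mp != NULL; mp = mp->b_cont)
        if (mp->b_datap->db_type == TOY_M_DATA)
            total += (size_t)(mp->b_wptr - mp->b_rptr);
    return total;
}
```

For a message made of a 4-byte control block followed by data blocks of 3 and 5 bytes, toy_msgdsize() returns 8, because the control block is not counted.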

A set of specialized and standard message types define messages that can be sent by a module or driver to control the Stream head. A set of specialized and standard message types define messages that can be sent by the Stream head to control a module or driver, normally in response to a standard input-output control for the Stream.

STREAMS messages are passed between a module, Stream head or Driver using a put procedure associated with the queue in the queue pair for the direction in which the message is being passed. Messages passed toward the Stream head are passed in the upstream direction, and those toward the Stream end, in the downstream direction.

The read-side queue in the queue pair associated with the module instance to which a message is passed is responsible for processing or queueing upstream messages; the write-side queue, for processing downstream messages.
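The downstream hand-off can be sketched with a chain of toy write-side queues. Each queue holds a put procedure and a pointer to the next queue downstream. Real modules pass a message along with the putnext(9) utility, which calls the put procedure of the next queue. The toy_* types, the integer stand-in for a message, and the module and driver routines below are hypothetical and only model that hand-off.

```c
#include <stddef.h>

struct toy_q;
typedef void (*toy_putp)(struct toy_q *q, int msg);

/* Toy downstream (write-side) queue: a put procedure plus the next queue. */
struct toy_q {
    const char *name;
    toy_putp put;          /* this queue's put procedure */
    struct toy_q *next;    /* next queue downstream; NULL at the Stream end */
    int last_msg;          /* last message seen (for the demonstration) */
    int seen;              /* number of messages seen */
};

/* Models putnext(): hand the message to the next queue's put procedure. */
void toy_putnext(struct toy_q *q, int msg)
{
    if (q->next != NULL)
        q->next->put(q->next, msg);
}

/* Hypothetical module write put procedure: record, transform, pass on. */
void module_wput(struct toy_q *q, int msg)
{
    q->last_msg = msg;
    q->seen++;
    toy_putnext(q, msg * 10);   /* stand-in for real processing */
}

/* Hypothetical driver write put procedure: the Stream end consumes it. */
void driver_wput(struct toy_q *q, int msg)
{
    q->last_msg = msg;
    q->seen++;
}
```

Upstream traffic works the same way through the read-side queues, with the chain running from the Driver toward the Stream head.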

STREAMS messages are generated by the Stream head and passed downstream in response to write(2s), putmsg(2s), and putpmsg(2s) system calls. They are also consumed by the Stream head and converted to information passed to user space in response to read(2s), getmsg(2s), and getpmsg(2s) system calls.

STREAMS messages are also generated by the Driver and passed upstream to be read ultimately by the Stream head. Conversely, messages written by the Stream head are passed downstream and ultimately consumed by the Driver.
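A small function can summarize how the Stream head chooses a message type for a putmsg(2s) request. As documented for putmsg(), a call that supplies a control part produces an M_PROTO message, or M_PCPROTO when the RS_HIPRI flag is given. A call with only a data part produces M_DATA. RS_HIPRI without a control part fails with EINVAL, and a call with neither part sends nothing. The enumeration and function name are hypothetical, and the real Stream head also handles priority bands for putpmsg(2s) and other error cases.

```c
#include <stdbool.h>

/* Hypothetical stand-ins for the outcomes described in the text. */
enum toy_type { TOY_NONE, TOY_M_DATA, TOY_M_PROTO, TOY_M_PCPROTO, TOY_EINVAL };

/* has_ctl / has_data: whether a control / data part was supplied
 * (a zero-length part still counts as supplied).  hipri: RS_HIPRI set. */
enum toy_type toy_putmsg_type(bool has_ctl, bool has_data, bool hipri)
{
    if (hipri)                          /* RS_HIPRI requires a control part */
        return has_ctl ? TOY_M_PCPROTO : TOY_EINVAL;
    if (has_ctl)
        return TOY_M_PROTO;             /* control block, optional data blocks */
    if (has_data)
        return TOY_M_DATA;              /* data part only, like write(2s) */
    return TOY_NONE;                    /* neither part: no message is sent */
}
```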

For more information on STREAMS messages, see Message Component.

1.3 Basic Streams Operations

This section provides a basic description of the user level interface and system calls that are used to manipulate a Stream.

A Stream is similar to, and is indeed implemented as, a character device special file and is associated with a character device within the GNU/Linux system. Each STREAMS character device special file (character device node, see mknod(2)) has a major and minor device number associated with it. In the usual situation, a Stream is associated with each minor character device node, in a similar fashion to a minor device instance for regular character device drivers.

STREAMS devices are opened, as are character device drivers, with the open(2s) system call. Opening a minor device node accesses a separate Stream instance between the user level process and the STREAMS device driver. As with normal character devices, the file descriptor returned from the open(2s) call can be used to further access the Stream.

Opening a minor device node for the first time results in the creation of a new instance of a Stream between the Stream head and the driver. Subsequent opens of the same minor device node do not result in the creation of a new Stream, but provide another file descriptor that can be used to access the same Stream instance. Only the first open of a minor device node will result in the creation of a new Stream instance.

Once it has opened a Stream, the user level process can send and receive data to and from the Stream with the usual read(2s) and write(2s) system calls, which are compatible with the existing character device interpretations of these system calls. STREAMS also provides the additional system calls getmsg(2s) and getpmsg(2s) to read control and data information from the Stream, as well as putmsg(2s) and putpmsg(2s) to write control and data information. These additional system calls provide a richer interface to the Stream than is provided by the traditional read(2s) and write(2s) calls.

A Stream is closed using the close(2s) system call (or a call that closes file descriptors, such as exit(2)). If a number of processes have the Stream open, only the last close(2s) of a Stream will result in the destruction of the Stream instance.

1.3.1 Basic Operations Example

A basic example of opening, reading from and writing to a Stream driver is shown in Listing 1.1.

The example in Listing 1.1 is for a communications device that provides a communications channel for data transfer between two processes or hosts. Data written to the device is communicated over the channel to the remote process or host. Data read from the device was written by the remote process or host.

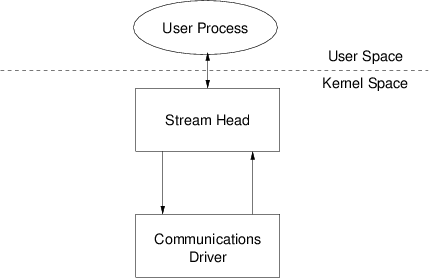

In the example in Listing 1.1, a simple Stream is opened using the open(2s) call. /dev/streams/comm/1 is the path to the character minor device node in the file system. When the device is opened, the character device node is recognized as a STREAMS special file, and the STREAMS subsystem creates a Stream (if one does not already exist for the minor device node) and associates it with the minor device node. Figure 103 illustrates the state of the Stream at the point after the open(2s) call returns.

The while loop in Listing 1.1 simply reads data from the device using the read(2s) system call and then writes the data back to the device using the write(2s) system call.

When a Stream is opened for blocking operation (i.e., neither O_NONBLOCK nor O_NDELAY was set), read(2s) will block until some data arrives. The read(2s) call might, however, return less than the requested ‘1024’ bytes. When data is read, the routine simply writes the data back to the device.

STREAMS implements flow control both in the upstream and downstream directions. Flow control limits the amount of normal data that can be queued awaiting processing within the Stream. High and low water marks for flow control are set on a queue pair basis. Flow control is local and specific to a given Stream. High priority control messages are not subject to STREAMS flow control.

When a Stream is opened for blocking operation (i.e., neither O_NONBLOCK nor O_NDELAY was set), write(2s) will block while waiting for flow control to subside. write(2s) will always block awaiting the availability of STREAMS message blocks to satisfy the call, regardless of the setting of O_NONBLOCK or O_NDELAY.
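The water mark behaviour can be illustrated with a small user-space model. This is a sketch under assumptions, not the STREAMS implementation: the structure fc_queue and the functions fc_canput, fc_enqueue and fc_dequeue are hypothetical and only loosely mirror canput(9), putq(9) and getq(9). Only normal data is counted, since high priority messages bypass flow control. Once the count reaches the high water mark the queue is marked full, and a writer that found it full is back-enabled only after the count drops below the low water mark.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical model of one queue's flow control state. */
struct fc_queue {
    size_t count;   /* bytes of normal data currently queued */
    size_t hiwat;   /* high water mark */
    size_t lowat;   /* low water mark, below hiwat */
    bool full;      /* queue is flow controlled */
    bool wanted;    /* a writer found the queue full and is waiting */
};

/* Loosely imitates canput(9): may another normal message be sent here? */
bool fc_canput(struct fc_queue *q)
{
    if (q->full) {
        q->wanted = true;       /* remember to back-enable this writer later */
        return false;
    }
    return true;
}

/* Account for len bytes of normal data placed on the queue. */
void fc_enqueue(struct fc_queue *q, size_t len)
{
    q->count += len;
    if (q->count >= q->hiwat)
        q->full = true;
}

/*
 * Account for len bytes of normal data removed from the queue.  Returns
 * true when a waiting writer should be back-enabled, which happens only
 * once the count has dropped below the low water mark.
 */
bool fc_dequeue(struct fc_queue *q, size_t len)
{
    q->count = len < q->count ? q->count - len : 0;
    if (q->full && q->count < q->lowat) {
        q->full = false;
        if (q->wanted) {
            q->wanted = false;
            return true;
        }
    }
    return false;
}
```

The gap between the two marks is the design point: without it, a queue hovering around a single threshold would wake and block its writer on almost every message.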

In the example in Listing 1.1, the exit(2) system call is used to exit the program; however, exit(2) results in the equivalent of a call to close(2s) for all open file descriptors, and the Stream is flushed and destroyed before the program finally exits.

1.4 Components

This section briefly describes each STREAMS component and how they interact within a Stream. Chapters later in this manual describe the components and their interaction in greater detail.

1.4.1 Queues

This subsection provides a brief overview of message queues and their associated procedures.

A queue provides an interface between an instance of a STREAMS driver, module or Stream head, and the other modules and drivers that make up a Stream, for one direction of message flow (i.e., upstream or downstream). When an instance of a STREAMS driver, module or Stream head is associated with a Stream, a pair of queues is allocated to represent the driver, module or Stream head within the Stream. Queue data structures are always allocated in pairs. The first queue in the pair is the read-side or upstream queue; the second queue is the write-side or downstream queue.
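The pair layout can be modelled with a two-element array whose first element is the read-side queue. In the sketch below, the type mqueue and the functions model_rd, model_wr and model_otherq are hypothetical; they only imitate the effect of the STREAMS RD(9), WR(9) and OTHERQ(9) utilities, and the pointer arithmetic assumes the adjacent pair allocation described above rather than any particular implementation.

```c
#include <stdbool.h>

/* Hypothetical, minimal queue: only the flag that marks the read side. */
struct mqueue {
    bool is_read;
};

/* Queues are allocated in pairs: q[0] is read-side, q[1] write-side. */
struct mqueue_pair {
    struct mqueue q[2];
};

void pair_init(struct mqueue_pair *p)
{
    p->q[0].is_read = true;     /* first queue: read-side (upstream) */
    p->q[1].is_read = false;    /* second queue: write-side (downstream) */
}

/* Imitate RD(9), WR(9) and OTHERQ(9) using the adjacency of the pair. */
struct mqueue *model_rd(struct mqueue *q)     { return q->is_read ? q : q - 1; }
struct mqueue *model_wr(struct mqueue *q)     { return q->is_read ? q + 1 : q; }
struct mqueue *model_otherq(struct mqueue *q) { return q->is_read ? q + 1 : q - 1; }
```

Because the two queues always travel together, a procedure given either one can reach its partner in constant time, which is what makes replying in the opposite direction cheap.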

Queues are described in greater detail in Queues and Priority.

1.4.1.1 Queue Procedures

This subsection provides a brief overview of queue procedures.

The STREAMS module, driver or Stream head provides five procedures that are associated with each queue in a queue pair: the put, service, open, close and admin procedures. Normally the open and close procedures (and possibly the optional admin procedure) are only associated with the read-side of the queue pair.

Each queue in the pair has a pointer to a put procedure. The put procedure is used by STREAMS to present a new message to an upstream or downstream queue. At the ends of the Stream, the put procedure of the Stream head write-side queue, or of the Stream end read-side queue, is normally invoked using the put(9s) utility. A module within the Stream typically has its put procedure invoked by an adjacent module, driver or Stream head that uses the putnext(9) utility from its own put or service procedure to pass messages to adjacent modules; the put procedure of the queue receiving the message is then invoked. The put procedure decides whether to process the message immediately, to queue the message on the message queue for later processing by the queue’s service procedure, or to pass the message to a subsequent queue using putnext(9).

Each queue in the pair has a pointer to an optional service procedure. The purpose of a service procedure is to process messages that were deferred by the put procedure by being placed on the message queue with utilities such as putq(9). A service procedure typically loops, taking messages off the queue and processing them. The procedure normally terminates the loop when it cannot process the current message (in which case it places the message back on the queue with putbq(9)), or when there are no messages left on the queue to process. A service procedure is optional in the sense that, if the put procedure never places any messages on the queue, a service procedure is unnecessary.
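The division of labour between put and service procedures can be shown with a self-contained model. Everything here is hypothetical: struct mq, m_putq, m_getq, m_putbq, m_put and m_srv imitate putq(9), getq(9), putbq(9) and a module's procedures, and the flag can_accept stands in for a canputnext(9) test on the next queue. The model ignores message priority. Note that the put procedure defers a message whenever messages are already queued, so that a newly arrived message cannot overtake deferred ones.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAXLOG 16

/* Hypothetical model types: a message and a FIFO message queue. */
struct mmsg {
    struct mmsg *next;
    int id;
};

struct mq {
    struct mmsg *first, *last;  /* the queue's message list */
    struct mq *next_q;          /* adjacent queue in the direction of flow */
    bool can_accept;            /* stands in for canputnext(9) on next_q */
    int log[MAXLOG];            /* ids received by this queue's put procedure */
    int nlog;
};

/* Imitates putq(9): append at the tail. */
void m_putq(struct mq *q, struct mmsg *mp)
{
    mp->next = NULL;
    if (q->last != NULL)
        q->last->next = mp;
    else
        q->first = mp;
    q->last = mp;
}

/* Imitates getq(9): remove from the head, NULL when empty. */
struct mmsg *m_getq(struct mq *q)
{
    struct mmsg *mp = q->first;

    if (mp != NULL) {
        q->first = mp->next;
        if (q->first == NULL)
            q->last = NULL;
        mp->next = NULL;
    }
    return mp;
}

/* Imitates putbq(9): return a message to the head of the queue. */
void m_putbq(struct mq *q, struct mmsg *mp)
{
    mp->next = q->first;
    q->first = mp;
    if (q->last == NULL)
        q->last = mp;
}

/* The next queue's put procedure, reduced to recording what arrived. */
void m_deliver(struct mq *q, struct mmsg *mp)
{
    if (q->nlog < MAXLOG)
        q->log[q->nlog++] = mp->id;
}

/* Put procedure: pass the message on immediately when nothing is already
 * deferred and the next queue can accept it; otherwise defer it. */
void m_put(struct mq *q, struct mmsg *mp)
{
    if (q->first == NULL && q->can_accept)
        m_deliver(q->next_q, mp);
    else
        m_putq(q, mp);
}

/* Service procedure: drain the queue until it is empty or the next queue
 * cannot accept more, in which case the message goes back with putbq. */
void m_srv(struct mq *q)
{
    struct mmsg *mp;

    while ((mp = m_getq(q)) != NULL) {
        if (!q->can_accept) {
            m_putbq(q, mp);
            break;
        }
        m_deliver(q->next_q, mp);
    }
}
```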

Each queue in the pair also has a pointer to an open and close procedure; however, the qi_qopen and qi_qclose pointers are only significant in the read-side queue of the queue pair.

The queue open procedure for a driver is called each time that a driver (or Stream head) is opened, including the first open that creates a Stream and upon each successive open of the Stream. The queue open procedure for a module is called when the module is first pushed onto (inserted into) a Stream, and for each successive open of a Stream upon which the module has already been pushed (inserted).

The queue close procedure for a module is called whenever the module is popped (removed) from a Stream. Modules are automatically popped from a Stream on the last close of the Stream. The queue close procedure for a driver is called with the last close of the Stream or when the last reference to the Stream is relinquished. If the Stream is linked under a multiplexing driver (I_LINK(7) (see streamio(7))), or has been named with fattach(3), then the Stream will not be dismantled on the last close, and the close procedure will not be called until the Stream is eventually unlinked (I_UNLINK(7) (see streamio(7))) or detached (fdetach(3)).
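The lifetime rules above amount to reference counting with two extra holds. The following sketch models them under assumptions; struct mstream and the ms_ functions are hypothetical, and only the rules stated in this section are modelled: the first open creates the Stream, every open calls the driver open procedure, and the driver close procedure runs once, when no open descriptor, multiplexer link or fattach(3) name still refers to the Stream.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical model of what keeps a Stream instance alive. */
struct mstream {
    bool exists;        /* the Stream instance has been created */
    int opens;          /* file descriptors currently open on it */
    bool linked;        /* linked under a multiplexing driver (I_LINK) */
    bool attached;      /* named in the file system with fattach(3) */
    int driver_opens;   /* calls to the driver open procedure */
    int driver_closes;  /* calls to the driver close procedure */
};

/* Dismantle the Stream once nothing refers to it any more. */
void ms_release(struct mstream *s)
{
    if (s->exists && s->opens == 0 && !s->linked && !s->attached) {
        s->driver_closes++;     /* driver close runs once, at dismantling */
        s->exists = false;
    }
}

void ms_open(struct mstream *s)
{
    if (!s->exists)
        s->exists = true;       /* the first open creates the Stream */
    s->opens++;
    s->driver_opens++;          /* driver open runs on every open */
}

void ms_close(struct mstream *s)
{
    assert(s->opens > 0);
    s->opens--;
    ms_release(s);
}

void ms_link(struct mstream *s)    { s->linked = true; }
void ms_unlink(struct mstream *s)  { s->linked = false; ms_release(s); }
void ms_fattach(struct mstream *s) { s->attached = true; }
void ms_fdetach(struct mstream *s) { s->attached = false; ms_release(s); }
```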

Procedures are described in greater detail in Procedures.

1.4.2 Messages

This subsection provides a brief overview of STREAMS messages.

In keeping with the concept of function decoupling, all control and data information is passed between STREAMS modules, drivers and the Stream head using messages. Utilities are provided to the STREAMS module writer for passing messages using queue and message pointers. STREAMS messages consist of a 3-tuple of a message block structure (msgb(9)), a data block structure (datab(9)) and a data buffer. The message block structure is used to provide an instance of a reference to a data block and pointers into the data buffer. The data block structure is used to provide information about the data buffer, such as message type, separate from the data contained in the buffer. Messages are normally passed between STREAMS modules, drivers and the Stream head using utilities that invoke the target module’s put procedure, such as put(9s), putnext(9) and qreply(9). Messages travel along a Stream with successive invocations of each driver, module and Stream head’s put procedure.

Messages are described in greater detail in Messages Overview and Messages.

1.4.2.1 Message Types

This subsection provides a brief overview of STREAMS message types.

Each data block (datab(9)) is assigned a message type. The message type discriminates the use of the message by drivers, modules and the Stream head. Most of the message types may be assigned by a module or driver when it generates a message, and the message type can be modified as a part of message processing. The Stream head uses a wider set of message types to perform its function of converting the functional interface to the user process into the messaging interface used by STREAMS modules and drivers.

Most of the defined message types (see Message Type Overview, and Message Types) are solely for use within the STREAMS framework. A more limited set of message types (M_PROTO, M_PCPROTO and M_DATA) can be used to pass control and data information to and from the user process via the Stream head. These message types can be generated and consumed using the read(2s), write(2s), getmsg(2s), getpmsg(2s), putmsg(2s) and putpmsg(2s) system calls and some streamio(7) STREAMS ioctl(2s) commands.
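How the Stream head chooses a message type for putmsg(2s) can be sketched as a small decision function. The enumeration mtype and the function putmsg_first_type are hypothetical, and the enumerator values are arbitrary rather than those of any STREAMS header. The rules follow the documented behaviour of putmsg(2s): a control part yields an M_PROTO message, or M_PCPROTO when RS_HIPRI is given; a data part alone yields M_DATA; RS_HIPRI without a control part is an error (EINVAL); and when neither part is given with flags of zero, no message is sent.

```c
#include <stdbool.h>

/* Illustrative labels; the values are arbitrary, not STREAMS header values. */
enum mtype {
    MT_INVALID,     /* the call fails with EINVAL */
    MT_NONE,        /* no message is sent */
    MT_DATA,        /* M_DATA */
    MT_PROTO,       /* M_PROTO */
    MT_PCPROTO      /* M_PCPROTO */
};

/*
 * Type of the first message block built for putmsg(2s).  Any data part
 * follows the control part as M_DATA blocks linked through b_cont.
 */
enum mtype putmsg_first_type(bool has_ctl, bool has_data, bool hipri)
{
    if (hipri)
        return has_ctl ? MT_PCPROTO : MT_INVALID;
    if (has_ctl)
        return MT_PROTO;
    return has_data ? MT_DATA : MT_NONE;
}
```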

Message types are described in detail in Message Type Overview and Message Types.

1.4.2.2 Message Linkage

Message blocks of differing types can be linked together into composite messages as illustrated in Figure 104.

Messages, once allocated, or when removed from a queue, exist standalone (i.e., they are not attached to any queue). Messages normally exist standalone when they have been first allocated by an interrupt service routine, or by the Stream head. They are placed into the Stream by the driver or Stream head at the Stream end by calling put(9s). After being inserted into a Stream, messages normally exist standalone only within a given queue’s put or service procedure. A queue’s put or service procedure normally does one of the following:

- pass the message along to an adjacent queue with putnext(9) or qreply(9);

- process and consume the message by deallocating it with freemsg(9); or

- place the message on the queue, from the put procedure with putq(9) or from the service procedure using putbq(9).
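A composite message is walked by following b_cont from block to block. The sketch below is a simplified model, assuming a block type stored directly in the block (real message blocks keep it in the data block, datab(9)); struct blk, BT_DATA, BT_PROTO, model_msgdsize and count_blocks are hypothetical. model_msgdsize imitates msgdsize(9), which counts only the bytes held in the M_DATA blocks of a message.

```c
#include <stddef.h>

/* Illustrative block types, not STREAMS header values. */
enum blk_type { BT_DATA, BT_PROTO };

/* Hypothetical, simplified message block. */
struct blk {
    struct blk *b_cont;                 /* next block of the same message */
    enum blk_type type;                 /* kept in datab(9) in real STREAMS */
    const unsigned char *b_rptr;        /* first byte of valid data */
    const unsigned char *b_wptr;        /* one past the last valid byte */
};

/* Imitates msgdsize(9): bytes held in the data blocks of one message. */
size_t model_msgdsize(const struct blk *mp)
{
    size_t total = 0;

    for (; mp != NULL; mp = mp->b_cont)
        if (mp->type == BT_DATA)
            total += (size_t)(mp->b_wptr - mp->b_rptr);
    return total;
}

/* Number of blocks linked into the message through b_cont. */
size_t count_blocks(const struct blk *mp)
{
    size_t n = 0;

    for (; mp != NULL; mp = mp->b_cont)
        n++;
    return n;
}
```

A message built by putmsg(2s) with both parts would have this shape: one control block at the head, followed by one or more data blocks.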

Once placed on a queue, a message exists only on that queue and all other references to the message are dropped.

Only one reference to a message block (msgb(9)) exists within the STREAMS framework.

Additional references to the same data block (datab(9)) and data buffer can be established by duplicating the message block, msgb(9), without duplicating either the data block (datab(9)) or the data buffer. The STREAMS dupb(9) and dupmsg(9) utilities can be used to duplicate message blocks. Also, the entire 3-tuple of message block, data block and data buffer can be copied using the copyb(9) and copymsg(9) STREAMS utilities.
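The difference between duplicating and copying shows up in the data block reference count. The following is a user-space model under assumptions; mmsgb, mdatab, m_allocb, m_dupb, m_copyb and m_freeb are hypothetical stand-ins for msgb(9), datab(9), allocb(9), dupb(9), copyb(9) and freeb(9). A duplicate shares the data block and buffer (raising db_ref), a copy gets a fresh data block and buffer, and the buffer is released only when its last reference is freed.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical simplified 3-tuple: message block -> data block -> buffer. */
struct mdatab {
    unsigned char *db_base, *db_lim;    /* the data buffer */
    int db_ref;                         /* message blocks referencing it */
};

struct mmsgb {
    struct mdatab *b_datap;
    unsigned char *b_rptr, *b_wptr;     /* this reference's view of the data */
};

/* Allocate all three parts; the new block starts out empty. */
struct mmsgb *m_allocb(size_t size)
{
    struct mmsgb *mp = malloc(sizeof(*mp));
    struct mdatab *dp = malloc(sizeof(*dp));
    unsigned char *buf = malloc(size ? size : 1);

    if (mp == NULL || dp == NULL || buf == NULL) {
        free(mp);
        free(dp);
        free(buf);
        return NULL;
    }
    dp->db_base = buf;
    dp->db_lim = buf + size;
    dp->db_ref = 1;
    mp->b_datap = dp;
    mp->b_rptr = mp->b_wptr = buf;
    return mp;
}

/* Duplicate: new message block, same data block and buffer. */
struct mmsgb *m_dupb(struct mmsgb *bp)
{
    struct mmsgb *mp = malloc(sizeof(*mp));

    if (mp == NULL)
        return NULL;
    *mp = *bp;                          /* copies b_datap, b_rptr, b_wptr */
    bp->b_datap->db_ref++;
    return mp;
}

/* Copy: new block, data block and buffer holding the bytes in [rptr, wptr). */
struct mmsgb *m_copyb(struct mmsgb *bp)
{
    size_t len = (size_t)(bp->b_wptr - bp->b_rptr);
    struct mmsgb *mp = m_allocb(len);

    if (mp == NULL)
        return NULL;
    memcpy(mp->b_wptr, bp->b_rptr, len);
    mp->b_wptr += len;
    return mp;
}

/* Free one reference; the buffer goes with the last one. */
void m_freeb(struct mmsgb *bp)
{
    struct mdatab *dp = bp->b_datap;

    if (--dp->db_ref == 0) {
        free(dp->db_base);
        free(dp);
    }
    free(bp);
}
```

Duplication is cheap but shares the bytes, so a module that intends to modify data in place would copy rather than duplicate.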

When a message is first allocated, it is the responsibility of the allocating procedure to either pass the message to a queue put procedure, place the message on its own message queue, or free the message. When a message is removed from a message queue, the reference then becomes the responsibility of the procedure that removed it from the queue. Under special circumstances, it might be necessary to temporarily store a reference to a standalone message in a module private data structure; however, this is usually not necessary.

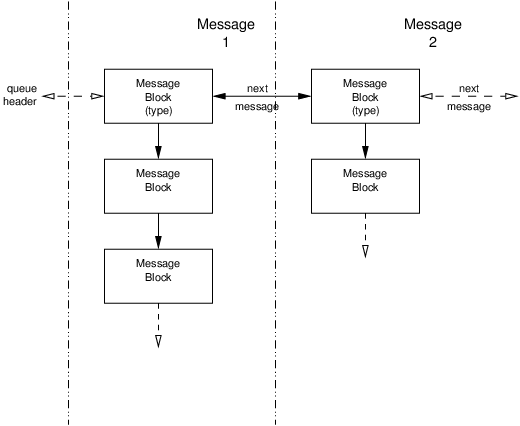

When a message has been placed on a queue, it is linked into the list of messages already on the queue. Messages that exist on a message queue await processing by the queue’s service procedure. Essentially, queue put procedures are a way of performing immediate message processing, and placing a message on a message queue for later processing by the queue’s service procedure is a way of deferring message processing until a later time: that is, until STREAMS schedules the service procedure for execution.

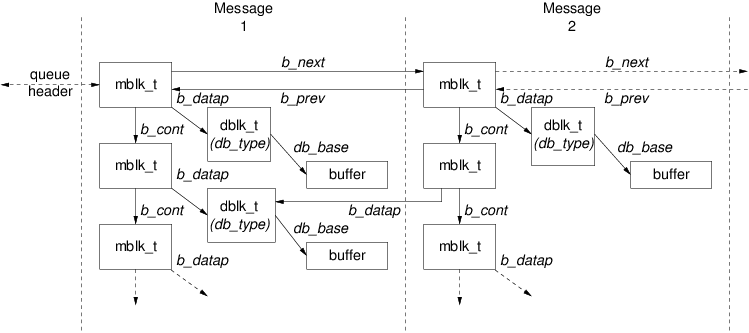

Two messages linked together on a message queue is illustrated in Figure 105. In the figure, ‘Message 2’ is linked to ‘Message 1’.

As illustrated in Figure 105, when a message exists on a message queue, the first message block in the message (which may head a chain of message blocks) is linked into a doubly linked list used by the message queue to order and track messages. The queue structure, queue(9), contains the head and tail pointers for the linked list of messages that reside on the queue. Some of the fields in the first message block are significant only in that block: the linked list pointers, and fields such as the message band that apply to all the message blocks in the message.

Message linkage is described in detail in Message Structure.

1.4.2.3 Message Queueing Priority

This subsection provides a brief overview of message queueing priority.

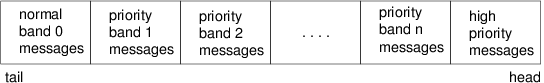

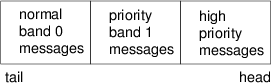

STREAMS message queues provide the ability to process messages of differing priority. There are three classes of message priority (in order of increasing priority):

- Normal messages.

- Priority messages.

- High-priority messages.

Normal messages are queued in priority band ‘0’. Priority messages are queued in bands greater than zero (‘1’ through ‘255’ inclusive). Messages of a higher ordinal band number are of greater priority. For example, a priority message for band ‘23’ is queued ahead of messages for band ‘22’. Normal and priority messages are subject to flow control within a Stream, and are queued according to priority.

High priority messages are assigned a priority band of ‘0’; however, their message type distinguishes them as high priority messages and they are queued ahead of all other messages. (The priority band for high priority messages is ignored and always set to ‘0’ whenever a high priority message type is queued.) High priority messages are given special treatment within the Stream and are not subjected to flow control; however, only one high priority message can be outstanding for a given transaction or operation within a Stream. The Stream head will discard high priority messages that arrive before a previous high priority message has been acted upon.

Because queue service procedures process messages in the order in which they appear in the queue, messages that are queued toward the head of the queue receive a higher scheduling priority than those toward the tail. High priority messages are queued first, followed by priority messages in descending band order, and finally by normal (band ‘0’) messages.
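The ordering rule can be expressed as a sorted insertion into the doubly linked message list. In this sketch, pmsg, pqueue, pq_rank and pq_insert are hypothetical; each message gets a rank (its band, or 256 for a high priority message, which sorts above every band) and is placed after all messages of equal or higher rank, which keeps messages of the same rank in FIFO order. The linear scan is a simplification; an implementation may instead keep per-band pointers.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical message: only the fields that queue ordering depends on. */
struct pmsg {
    struct pmsg *b_next, *b_prev;   /* message-level links on the queue */
    bool hipri;                     /* high priority message type */
    unsigned char b_band;           /* 0..255; forced to 0 for high priority */
    int id;
};

/* Head and tail of the queue's message list, as held in queue(9). */
struct pqueue {
    struct pmsg *q_first, *q_last;
};

/* Sort key: high priority ranks above every band. */
int pq_rank(const struct pmsg *mp)
{
    return mp->hipri ? 256 : mp->b_band;
}

/* Insert after every message of equal or higher rank. */
void pq_insert(struct pqueue *q, struct pmsg *mp)
{
    struct pmsg *pos = q->q_first;  /* first message that mp must precede */

    if (mp->hipri)
        mp->b_band = 0;             /* the band of a high priority message is ignored */
    while (pos != NULL && pq_rank(pos) >= pq_rank(mp))
        pos = pos->b_next;

    mp->b_next = pos;
    mp->b_prev = pos != NULL ? pos->b_prev : q->q_last;
    if (mp->b_prev != NULL)
        mp->b_prev->b_next = mp;
    else
        q->q_first = mp;
    if (pos != NULL)
        pos->b_prev = mp;
    else
        q->q_last = mp;
}
```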

STREAMS provides independent flow control parameters for each band of ordinary (normal and priority) messages. Normal message flow control parameters are contained in the queue structure itself (queue(9)); priority message parameters, in the auxiliary queue band structure (qband(9)). A set of flow control parameters exists for each band (from ‘0’ to ‘255’).
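Per-band flow control means that a full band does not block other bands. The model below assumes one flow control record per band held in a single array, whereas STREAMS keeps band 0 in queue(9) and the other bands in qband(9); band_fc, bq, bq_init, bq_add, bq_remove and bq_bcanput are hypothetical, the last loosely imitating bcanput(9).

```c
#include <stdbool.h>
#include <stddef.h>

#define NBANDS 256

/* Hypothetical flow control record for one band. */
struct band_fc {
    size_t count, hiwat, lowat;
    bool full;
};

/* Hypothetical queue holding one record per band, 0 through 255. */
struct bq {
    struct band_fc band[NBANDS];
};

void bq_init(struct bq *q, size_t hiwat, size_t lowat)
{
    for (int b = 0; b < NBANDS; b++)
        q->band[b] = (struct band_fc){ 0, hiwat, lowat, false };
}

/* Loosely imitates bcanput(9): only the named band's state matters. */
bool bq_bcanput(const struct bq *q, unsigned char band)
{
    return !q->band[band].full;
}

/* Account for len bytes queued in the given band. */
void bq_add(struct bq *q, unsigned char band, size_t len)
{
    struct band_fc *fc = &q->band[band];

    fc->count += len;
    if (fc->count >= fc->hiwat)
        fc->full = true;
}

/* Account for len bytes removed from the given band. */
void bq_remove(struct bq *q, unsigned char band, size_t len)
{
    struct band_fc *fc = &q->band[band];

    fc->count = len < fc->count ? fc->count - len : 0;
    if (fc->count < fc->lowat)
        fc->full = false;
}
```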

As a high priority message is defined by its message type, some message types are available in high-priority/ordinary pairs (e.g., M_PCPROTO/M_PROTO) that perform the same function but have differing priority.

Queueing priority is described in greater detail in Queues and Priority.

1.4.3 Modules

This subsection provides a brief overview of STREAMS modules.

Modules are components of message processing that exist as a unit within a Stream beneath the Stream head. Modules are optional components and zero or more (up to a predefined limit) instances of a module can exist within a given Stream. Instances of a module have a unique queue pair associated with them that permit the instance to be linked among the other queue pairs in a Stream.

Figure 48 illustrates an instance of each of two modules (‘A’ and ‘B’) that are linked within the same Stream. Each module instance consists of a queue pair (‘Ad/Au’ and ‘Bd/Bu’ in the figure). Messages flow from the driver to the Stream head through the upstream queues in each queue pair (‘Au’ and then ‘Bu’ in the figure); and from Stream head to driver through downstream queues (‘Bd’ and then ‘Ad’).

The module provides unique message processing procedures (put and, optionally, service procedures) for each queue in the queue pair. One set of put and service procedures handles upstream messages; the other set, downstream messages. Each procedure is independent of the others. STREAMS handles the passing of messages, but any other information that is to be passed between procedures must be passed explicitly by the procedures themselves. Each queue provides a module private pointer that can be used by procedures for maintaining state information or passing other information between procedures.

Each procedure can pass messages directly to the adjacent queue in the direction of message flow of its own queue. This is normally performed with the STREAMS putnext(9) utility. For example, in Figure 48, procedures associated with queue ‘Bd’ can pass messages to queue ‘Ad’; ‘Au’ to ‘Bu’.

Also, procedures can easily locate the other queue in a queue pair and pass messages along the opposite direction of flow. This is normally performed using the STREAMS qreply(9) utility. For example, in Figure 48, procedures associated with queue ‘Ad’ can easily locate queue ‘Au’ and pass messages to ‘Bu’ using qreply(9).
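The two ways of passing messages in Figure 48 can be modelled by wiring queue pointers for a Stream head, module ‘B’, module ‘A’ and a driver, in that order from top to bottom as the text of the figure describes. In this sketch, struct nq, build_fig48, m_putnext and m_qreply are hypothetical, messages are left abstract (each function returns the queue that receives the message), and the partner pointer stands in for OTHERQ(9). The model relies on qreply(9) being equivalent to a putnext(9) from the other queue of the pair.

```c
#include <stddef.h>

/* Hypothetical queue for this model. */
struct nq {
    const char *name;
    struct nq *partner;     /* other queue of the same pair, as OTHERQ(9) */
    struct nq *q_next;      /* next queue in this queue's direction of flow */
    const char *last_from;  /* queue that most recently passed a message here */
};

/* Index of each queue: read side even, write side odd, pairs adjacent. */
enum { SHR, SHW, BU, BD, AU, AD, DU, DD, NQ };

/* Wire up the Stream of Figure 48: Stream head, module B, module A, driver. */
void build_fig48(struct nq q[NQ])
{
    static const char *const names[NQ] =
        { "SHr", "SHw", "Bu", "Bd", "Au", "Ad", "Du", "Dd" };

    for (int i = 0; i < NQ; i++) {
        q[i].name = names[i];
        q[i].partner = &q[i ^ 1];
        q[i].q_next = NULL;         /* the ends of the Stream have no q_next */
        q[i].last_from = NULL;
    }
    /* downstream: Stream head -> B -> A -> driver */
    q[SHW].q_next = &q[BD];
    q[BD].q_next = &q[AD];
    q[AD].q_next = &q[DD];
    /* upstream: driver -> A -> B -> Stream head */
    q[DU].q_next = &q[AU];
    q[AU].q_next = &q[BU];
    q[BU].q_next = &q[SHR];
}

/* Imitates putnext(9): returns the queue whose put procedure receives it. */
struct nq *m_putnext(struct nq *q)
{
    q->q_next->last_from = q->name;
    return q->q_next;
}

/* Imitates qreply(9): a putnext from the other queue of the pair. */
struct nq *m_qreply(struct nq *q)
{
    return m_putnext(q->partner);
}
```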

Each queue in a module is associated with messages, processing procedures, and module private data. Typically, each queue in the module has a distinct set of messages, processing procedures and module private data.

- Messages

Messages can be inserted into, and removed from, the linked list message queue associated with each queue in the queue pair as they pass through the module. For example, in Figure 48, ‘Message Ad’ exists on the ‘Ad’ queue; ‘Message Bu’, on the ‘Bu’ queue.

- Processing Procedures

Each queue in a module queue pair requires that a put procedure be defined for the queue. Upstream or downstream modules, drivers or the Stream head invoke a put procedure of the module when they pass messages to the module along the Stream.

Each queue may optionally provide a service procedure that will be invoked when messages are placed on the queue for later processing by the service procedure. A service procedure is never required if the module put procedure never enqueues a message to either queue in the queue pair.

Either procedure in either queue in the pair can pass messages upstream or downstream and may alter information within the module private data associated with either queue in the pair.

- Data

Module processing procedures can make use of a pointer in each queue structure that is reserved for use by the module writer to locate module private data structures. These data structures are typically attached to each queue from the module’s open procedure, and detached from the module’s close procedure. Module private data is useful for maintaining state information associated with the instance of the module and for passing information between procedures.
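The module private pointer pattern can be sketched as follows. The queue type privq, its q_ptr member (named after the queue(9) field), and the functions mod_open, mod_wput, mod_rput and mod_close are hypothetical and greatly simplified: the open procedure allocates one state structure and attaches it to both queues of the pair, either put procedure can then update it, and the close procedure detaches and frees it. Returning an error number from the open routine on failure is an assumption of this model.

```c
#include <errno.h>
#include <stdlib.h>

/* Hypothetical queue reduced to its module private pointer. */
struct privq {
    void *q_ptr;
};

/* Hypothetical per-instance state shared by both queues of the pair. */
struct mod_priv {
    unsigned long down_msgs;    /* counted by the write-side put procedure */
    unsigned long up_msgs;      /* counted by the read-side put procedure */
};

/* Open: allocate the state and attach it to both queues. */
int mod_open(struct privq *rq, struct privq *wq)
{
    struct mod_priv *p = calloc(1, sizeof(*p));

    if (p == NULL)
        return ENOMEM;          /* this model reports failure as an error number */
    rq->q_ptr = wq->q_ptr = p;
    return 0;
}

/* Put procedures reach the shared state through their own queue. */
void mod_wput(struct privq *wq) { ((struct mod_priv *)wq->q_ptr)->down_msgs++; }
void mod_rput(struct privq *rq) { ((struct mod_priv *)rq->q_ptr)->up_msgs++; }

/* Close: detach the state from both queues and free it. */
void mod_close(struct privq *rq, struct privq *wq)
{
    free(rq->q_ptr);
    rq->q_ptr = wq->q_ptr = NULL;
}
```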

Modules are described in greater detail in Modules.

1.4.4 Drivers

This subsection provides a brief overview of STREAMS drivers.

The Device component of the Stream is an initial part of the regular Stream (positioned just below the Stream head). Most Streams start out life as a Stream head connected to a driver. The driver is positioned within the Stream at the Stream end. Note that not all Streams require the presence of a driver: a STREAMS-based pipe or FIFO Stream does not contain a driver component.

A driver instance is represented by a queue pair within the Stream, just as for modules. Also, each queue in the queue pair has a message queue, processing procedures, and private data associated with it in the same way as for STREAMS modules. There are three differences that distinguish drivers from modules:

- Drivers are responsible for generating and consuming messages at the Stream end.

Drivers convert STREAMS messages into appropriate software or hardware actions, events and data transfer. As a result, drivers that are associated with a hardware device normally contain an interrupt service procedure that handles the external device specific actions, events and data transfer. Messages are typically consumed at the Stream end in the driver’s downstream put or service procedure, where actions are taken or data is transferred to the hardware device. Messages are typically generated at the Stream end in the driver’s interrupt service procedure, and inserted upstream using the put(9s) STREAMS utility.

Software drivers (so-called pseudo-device drivers) are similar to hardware device drivers with the exception that they typically do not contain an interrupt service routine. Pseudo-device drivers are still responsible for consuming messages at the Stream end and converting them into actions and data output (external to STREAMS), as well as generating messages in response to events and data input (external to STREAMS).

In contrast, modules are intended to operate solely within the STREAMS framework.

- Because a driver sits at a Stream end and can support multiplexing, a driver can have multiple Streams connected to it, either upstream (fan-in) or downstream (fan-out) (see Multiplexing of Streams).

In contrast, an instance of a module is only connected within a single Stream and does not support multiplexing at the module queue pair.

- An instance of a driver (queue pair) is created and destroyed using the open(2s) and close(2s) system calls.

In contrast, an instance of a module (queue pair) is created and destroyed using the I_PUSH and I_POP STREAMS ioctl(2s) commands.

Aside from these differences, the STREAMS driver is similar in most respects to the STREAMS module. Both drivers and modules can pass signals, error codes, return values, and other information to processes in adjacent queue pairs using STREAMS messages of various message types provided for that purpose.

Drivers are described in greater detail in Drivers.

1.4.5 Stream Head

This subsection provides a brief overview of Stream heads.

The Stream head is the first component of a Stream that is allocated when a Stream is created. All Streams have an associated Stream head.

In the case of STREAMS-based pipes, two Stream heads are associated with each other. STREAMS-based FIFOs have one Stream head but no Stream end or Driver. For all other Streams, as illustrated in Figure 48, there exists a Stream head and a Stream end or Driver.

The Stream head has a queue pair associated with it, just as does any other STREAMS module or driver. Also, just as with any other module, the Stream head provides the processing procedures and private data for processing of messages passed to queues in the pair.

The difference is that the processing procedures are provided by the GNU/Linux system rather than being written by the module or driver writer. These system-provided processing procedures perform the necessary functions to generate messages to, and consume messages from, the Stream in response to system calls invoked by a user process. Also, the Stream head provides a set of specialized behaviours, and a set of specialized message types that may be exchanged with modules and drivers in the Stream, to provide the standard interface expected by the user application.

Stream heads are described in greater detail in Mechanism, Polling, Pipes and FIFOs, and Terminal Subsystem.

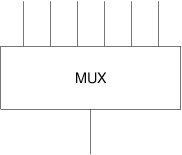

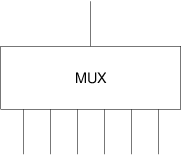

1.5 Multiplexing

This subsection provides a brief overview of Stream Multiplexing.

Basic Streams that can be created with the open(2s) or pipe(2s) system calls are linear arrangements from Stream head to Driver or Stream head to Stream head. Although these linear arrangements satisfy the needs of a large class of STREAMS applications, there exists a class of applications that are more naturally represented by multiplexing: that is, an arrangement where one or more upper Streams feed into one or more lower Streams. Network protocol stacks (a significant application area for STREAMS) are typically more easily represented by multiplexed arrangements.

A fan-in multiplexing arrangement is one in which multiple upper Streams feed into a single lower Stream in a many-to-one relationship as illustrated in Figure 49.

A fan-out multiplexing arrangement is one in which a single upper Stream feeds into multiple lower Streams in a one-to-many relationship as illustrated in Figure 50. (This is the more typical arrangement for communications protocol stacks.)

A fan-in/fan-out multiplexing arrangement is one in which multiple upper Streams feed into multiple lower Streams in a many-to-many relationship as illustrated in Figure 51.

To support these arrangements, STREAMS provides a mechanism that can be used to assemble multiplexing arrangements in a flexible way. An otherwise normal STREAMS pseudo-device driver can be specified to be a multiplexing driver.

Conceptually, a multiplexing driver can perform upper multiplexing between multiple Streams on its upper side connecting the user process and the multiplexing driver, and lower multiplexing between multiple Streams on its lower side connecting the multiplexing driver and the device driver.

As with normal STREAMS drivers, multiplexing drivers can have multiple Streams created on their upper side using the open(2s) system call. Unlike regular STREAMS drivers, however, multiplexing drivers have the additional capability that other Streams can be linked to the lower side of the driver. The linkage is performed by issuing specialized streamio(7) commands to the driver that are recognized by multiplexing drivers (I_LINK, I_PLINK, I_UNLINK, I_PUNLINK).

Any Stream can be linked under a multiplexing driver (provided that it is not already linked under another multiplexing driver). This includes an upper Stream of a multiplexing driver. In this fashion, complex trees of multiplexing drivers and linear Stream segments containing pushed modules can be assembled. Using these linkage commands, complex arrangements can be assembled, manipulated and dismantled by a user or daemon process to suit application needs.

The fan-in arrangement of Figure 49 performs upper multiplexing; the fan-out arrangement of Figure 50, lower multiplexing; and the fan-in/fan-out arrangement of Figure 51, both upper and lower multiplexing.

1.5.1 Fan-Out Multiplexers

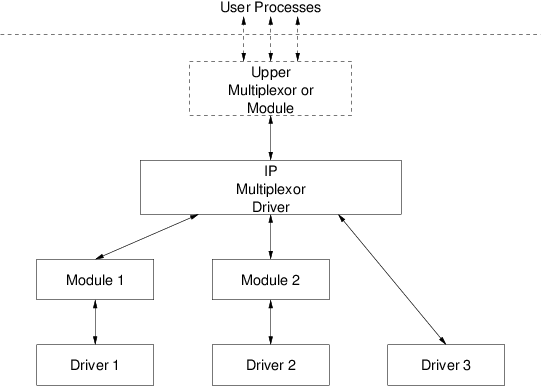

Figure 47 illustrates an example, closely related to the fan-out arrangement of Figure 50, where the Internet Protocol (IP) within a networking stack is implemented as a multiplexing driver and independent Streams to three specific device drivers are linked beneath the IP multiplexing driver.

The IP multiplexing driver is capable of routing messages to the lower Streams on the basis of address and the subnet membership of each device driver. Messages received from the lower Streams can be discriminated and sent to the appropriate user process upper Stream (e.g., on the basis of protocol Id). Each lower Stream, ‘Module 1’, ‘Module 2’, ‘Driver 3’, presents the same service interface to the IP multiplexing driver, regardless of the specific hardware or lower level communications protocol supported by the driver. For example, the lower Streams could all support the Data Link Provider Interface (DLPI).
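Routing on subnet membership can be sketched as a lookup over the lower Streams linked beneath the multiplexer. The table layout, struct lower and route are hypothetical; muxid stands for the multiplexer identifier that an I_LINK returns. The sketch uses longest-prefix matching, one common rule that the text does not prescribe, and compares masks numerically, which assumes contiguous subnet masks in host byte order.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical record for one lower Stream linked under the IP multiplexer. */
struct lower {
    int muxid;          /* identifier returned when the Stream was linked */
    uint32_t net;       /* subnet address, host byte order */
    uint32_t mask;      /* contiguous subnet mask, host byte order */
};

/* Choose the lower Stream whose subnet contains dst; the longest mask
 * wins.  Returns NULL when no lower Stream matches. */
const struct lower *route(const struct lower *tab, size_t n, uint32_t dst)
{
    const struct lower *best = NULL;

    for (size_t i = 0; i < n; i++)
        if ((dst & tab[i].mask) == tab[i].net &&
            (best == NULL || tab[i].mask > best->mask))
            best = &tab[i];
    return best;
}
```

Because each lower Stream presents the same service interface, the routing decision is the only place where the multiplexer needs to distinguish between them.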

As depicted in Figure 47, the IP multiplexing driver could have additional multiplexing drivers or modules above it. Also, ‘Driver 1’, ‘Driver 2’ or ‘Driver 3’ could themselves be multiplexing drivers (or replaced by multiplexing drivers). In general, multiplexing drivers are independent in the sense that it is not necessary that a given multiplexing driver be aware of other multiplexing drivers upstream of its upper Stream, nor downstream of its lower Streams.

1.5.2 Fan-In Multiplexers

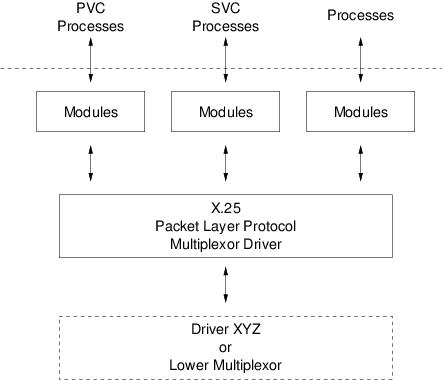

Figure 52 illustrates an example, more closely related to the fan-in arrangement of Figure 49, where an X.25 Packet Layer Protocol multiplexing driver is used to switch messages between upper Streams supporting Permanent Virtual Circuits (PVCs) or Switched Virtual Circuits (SVCs) and (possibly) a single lower Stream.

The ability to multiplex upper Streams to a driver is a characteristic supported by all STREAMS drivers, not just multiplexing drivers. Opening each additional minor device node with open(2s) results in another upper Stream that can be associated with the device driver. What the multiplexing driver permits beyond the normal STREAMS driver is the ability to link one or more lower Streams (possibly containing modules and another multiplexing driver) beneath it.
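Fan-in switching of circuits onto one lower Stream can be modelled by tagging each upper Stream with a circuit identifier. In this hypothetical sketch, upper, x25mux, mux_open and mux_up are invented names, and the identifier is assumed to be an X.25 logical channel number. Only the upstream direction is shown: packets arriving on the single lower Stream are delivered to whichever upper Stream owns the packet's channel. Downstream, each upper Stream's packets would be stamped with its channel number before being passed to the lower Stream.

```c
#include <stddef.h>

#define MAX_UPPER 8

/* Hypothetical upper Stream record: one virtual circuit per upper Stream. */
struct upper {
    int lcn;            /* logical channel number of the circuit */
    int received;       /* packets delivered upstream to this Stream */
};

/* Hypothetical multiplexer state: upper Streams over one lower Stream. */
struct x25mux {
    struct upper up[MAX_UPPER];
    size_t nup;
};

/* Associate a newly opened upper Stream with a logical channel. */
struct upper *mux_open(struct x25mux *m, int lcn)
{
    if (m->nup == MAX_UPPER)
        return NULL;
    m->up[m->nup] = (struct upper){ lcn, 0 };
    return &m->up[m->nup++];
}

/* Upstream: deliver a packet arriving on the lower Stream to the upper
 * Stream owning its logical channel; NULL if no upper Stream owns it. */
struct upper *mux_up(struct x25mux *m, int lcn)
{
    for (size_t i = 0; i < m->nup; i++)
        if (m->up[i].lcn == lcn) {
            m->up[i].received++;
            return &m->up[i];
        }
    return NULL;
}
```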

1.5.3 Complex Multiplexers

When constructing multiplexers for applications, even more complicated arrangements are possible. Multiplexing over multiple Streams on both the upper and lower side of a multiplexing driver is possible. Also, a driver that provides lower multiplexing can be linked beneath a driver that provides upper multiplexing, as depicted by the dashed box in Figure 52. Each multiplexing driver can perform upper multiplexing, lower multiplexing, or both, providing flexibility for the designer.

STREAMS provides multiplexing as a general purpose facility that is flexible in that multiplexing drivers can be stacked and linked in a wide array of complex configurations. STREAMS imposes few restrictions on processing within the multiplexing driver, making the mechanism applicable to many classes of applications.

Multiplexing is described in greater detail in Multiplexing.

1.6 Benefits of STREAMS

STREAMS provides a flexible, scalable, portable, and reusable kernel and user level facility for the development of GNU/Linux system communications services. STREAMS allows the creation of kernel resident modules that offer standard message passing facilities and the ability for user level processes to manipulate and configure those modules into complex topologies. STREAMS offers a standard way for user level processes to select and interconnect STREAMS modules and drivers in a wide array of combinations without the need to alter Linux kernel code, recompile or relink the kernel.

STREAMS also assists in simplifying the user interface to device drivers and protocol stacks by providing powerful system calls for the passing of control information from user to driver. With STREAMS it is possible to directly implement asynchronous primitive-based service interfaces to protocol modules.

1.6.1 Standardized Service Interfaces

Many modern communications protocols define a service primitive interface between a service user and a service provider. Examples include the ISO Open Systems Interconnection (OSI) protocols and protocols based on OSI such as Signalling System Number 7 (SS7). Protocols based on OSI can be directly implemented using STREAMS.

In contrast to other approaches, such as BSD Sockets, STREAMS does not impose a structured function call interface on the interaction between a user level process and a kernel resident protocol module. Instead, STREAMS permits the service interface between a service user and service provider (whether the service user is a user level process or kernel resident STREAMS module) to be defined in terms of STREAMS messages that represent standardized service primitives across the interface.

A service interface is defined13 at the boundary between neighbouring modules. The upper module at the boundary is termed the service user and the lower module at the boundary is termed the service provider. Implemented under STREAMS, a service interface is a specified set of messages and the rules that allow passage of these messages across the boundary. A STREAMS module or driver that implements a service interface will exchange messages within the defined set across the boundary and will respond to received messages in accordance with the actions defined for the specific message and the sequence of messages preceding receipt of the message (i.e., in accordance with the state of the module).
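A service interface can be sketched as a provider state machine that accepts each primitive only in the states where it is valid. The primitives, states and replies below are hypothetical; real interfaces such as DLPI define their own primitive sets and state tables, usually including pending states that this simplified sketch omits.

```c
/* Hypothetical primitives, states and replies for a tiny service interface. */
enum prim  { P_CONN_REQ, P_DATA_REQ, P_DISCON_REQ };
enum state { S_IDLE, S_CONNECTED };
enum reply { R_OK, R_ERROR_ACK };

/* Hypothetical service provider: its state is all that the rules depend on. */
struct provider {
    enum state state;
};

/* Accept a primitive only if it is valid in the current state, moving to
 * the next state; otherwise answer with an error acknowledgement and stay. */
enum reply provider_recv(struct provider *p, enum prim pr)
{
    switch (p->state) {
    case S_IDLE:
        if (pr == P_CONN_REQ) {
            p->state = S_CONNECTED;
            return R_OK;
        }
        break;
    case S_CONNECTED:
        if (pr == P_DATA_REQ)
            return R_OK;
        if (pr == P_DISCON_REQ) {
            p->state = S_IDLE;
            return R_OK;
        }
        break;
    }
    return R_ERROR_ACK;
}
```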

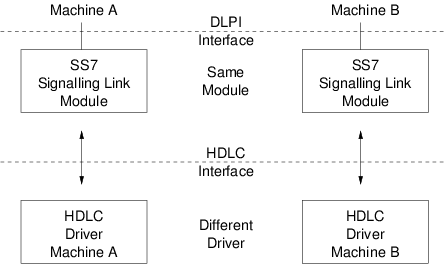

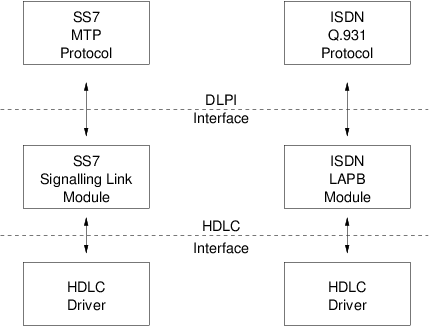

Instances of protocol stacks are formed using STREAMS facilities for pushing modules and linking multiplexers. For proper and consistent operation, protocol stacks are assembled so that each neighbouring module, driver and multiplexer implements the same service interface. For example, a module that implements the SS7 MTP protocol layer, as shown in Figure 53, presents a protocol service interface at its input and output sides. Other modules, drivers and multiplexers should only be connected at the input and output sides of the SS7 MTP protocol module if they provide the same interface in the symmetric role (i.e., user or provider).

It is the ability of STREAMS to implement service primitive interfaces between protocol modules that makes it particularly well suited to implementing protocols based on the OSI service primitive interface, such as X.25, Integrated Services Digital Network (ISDN), and Signalling System No. 7 (SS7).

1.6.2 Manipulating Modules

STREAMS provides the ability to manipulate the configuration of drivers, modules and multiplexers from user space, easing configuration of protocol stacks and profiles. Modules, drivers and multiplexers implementing common service interfaces can be substituted with ease. User level processes may access the protocol stack at various levels using the same set of standard system calls, while also permitting the service interface to the user process to match that of the topmost module.

It is this flexibility that makes STREAMS well suited to the implementation of communications protocols based on the OSI service primitive interface model. Additional benefits for communications protocols include:

- User level programs use a service interface that is independent of underlying protocols, drivers, device implementation, and physical communications media.

- Communications architecture and upper layer protocols can be independent of underlying protocols, drivers, device implementation, and physical communications media.

- Communications protocol profiles can be created by selecting and connecting constituent lower layer protocols and services.

The benefits of the STREAMS approach are protocol portability, protocol substitution, protocol migration, and module reuse. Examples provided in the sections that follow are real-world examples taken from the open source Signalling System No. 7 (SS7) stack implemented by the OpenSS7 Project.

1.6.2.1 Protocol Portability

Figure 53 shows how the same SS7 Signalling Link protocol module can be used with different drivers on different machines by implementing compatible service interfaces. The service interfaces used beneath the SS7 Signalling Link module are the Data Link Provider Interface (DLPI) and the Communications Device Interface (CDI) for High-Level Data Link Control (HDLC).

Because the SS7 Signalling Link module is implemented with standard STREAMS mechanisms, porting an entire protocol stack from one machine to another requires porting only the driver. The same SS7 Signalling Link module (and upper layer modules) can be used on both machines.

Because the Driver presents a standardized service interface using STREAMS, porting a driver from the machine architecture of ‘Machine A’ to that of ‘Machine B’ consists of changes internal to the driver and external to the STREAMS environment. Machine dependent issues, such as bus architectures and interrupt handling, are kept independent of the primary state machine and service interface. Porting a driver from one major UNIX or UNIX-like operating system and machine architecture supporting STREAMS to another is a straightforward task.

With OpenSS7, STREAMS provides the ability to directly port a large body of existing STREAMS modules to the GNU/Linux operating system.

1.6.2.2 Protocol Substitution

STREAMS permits the easy substitution of protocol modules (or device drivers) within a protocol stack to provide a new protocol profile. When protocol modules are implemented to a compatible service interface, they can be recombined and substituted, providing a flexible protocol architecture. In some circumstances, and through proper design, protocol modules that implement the same service interface can be substituted even if they were not originally intended to be combined in such a fashion.

Figure 300 illustrates how STREAMS can substitute upper layer protocol modules to implement a different protocol stack over the same HDLC driver. As each module and driver supports the same service interface at each level, it is conceivable that the resulting modules could be recombined to support, for example, SS7 MTP over an ISDN LAPB channel.14

Another example would be substituting an M2PA signalling link module for a traditional SS7 Signalling Link module to provide SS7 over IP.
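Substitution works because the upper module only ever exchanges primitives with whatever lies below it. The sketch below models this in plain C under stated assumptions. struct lower, link_put(), mtp_send(), the SL_* primitives and the framing functions are all hypothetical. The framing overheads are arbitrary numbers, not those of HDLC or M2PA. A function pointer stands in for the STREAMS put procedure. mtp_send() builds the same primitive regardless of which lower component it is handed.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical primitives of a signalling-link service interface. */
enum sl_prim { SL_START_REQ, SL_PDU_REQ, SL_STOP_REQ };

struct sl_msg {
    enum sl_prim prim;
    const char *pdu;                /* payload for SL_PDU_REQ, else NULL */
};

/* A lower component is reached only through its put procedure. */
struct lower {
    const char *name;
    int in_service;                 /* set by SL_START_REQ */
    size_t wire_octets;             /* octets handed to the medium */
    size_t (*frame)(size_t len);    /* implementation-specific framing */
    int (*put)(struct lower *, const struct sl_msg *);
};

/* Arbitrary illustrative overheads, not the real protocol values. */
static size_t hdlc_frame(size_t len) { return len + 4; }
static size_t m2pa_frame(size_t len) { return len + 16; }

/* Interface rules shared by every provider: PDUs are accepted only
 * between SL_START_REQ and SL_STOP_REQ.  Returns 0 or -1. */
static int link_put(struct lower *lw, const struct sl_msg *m)
{
    switch (m->prim) {
    case SL_START_REQ: lw->in_service = 1; return 0;
    case SL_STOP_REQ:  lw->in_service = 0; return 0;
    case SL_PDU_REQ:
        if (!lw->in_service)
            return -1;
        lw->wire_octets += lw->frame(strlen(m->pdu));
        return 0;
    }
    return -1;
}

/* The upper layer builds primitives; it never looks below the interface. */
static int mtp_send(struct lower *lw, const char *pdu)
{
    struct sl_msg m = { SL_PDU_REQ, pdu };
    return lw->put(lw, &m);
}
```

Swapping the traditional link for the M2PA link changes only which struct lower is passed to mtp_send(). The upper layer code is unchanged.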

1.6.2.3 Protocol Migration

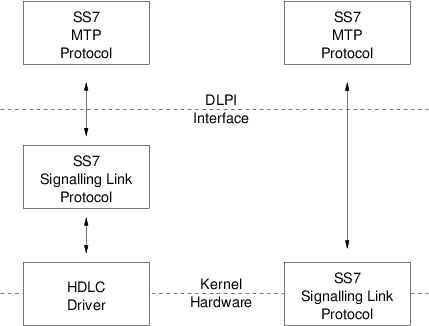

Figure 54 illustrates how STREAMS can move functions between kernel software and front end firmware. A common downstream service interface allows the transport protocol module to be independent of the number or type of modules below. The same transport module will connect without modification to either an SS7 Signalling Link module or SS7 Signalling Link driver that presents the same service interface.

The OpenSS7 SS7 Stack also uses this capability to adapt the protocol stack to front-end hardware that supports differing degrees of SS7 Signalling Link functionality in firmware. Hardware cards that provide no more than a transparent bit stream can have SS7 Signalling Data Link, SS7 Signalling Data Terminal and SS7 Signalling Link modules pushed to provide a complete SS7 Signalling Link that might, on another hardware card, be implemented mostly in firmware.

By shifting functions between software and firmware, developers can produce cost effective, functionally equivalent systems over a wide range of configurations. They can rapidly incorporate technological advances. The same upper layer protocol module can be used on a lower capacity machine, where economics may preclude the use of front-end hardware, and also on a larger scale system where a front-end is economically justified.

1.6.2.4 Module Reusability

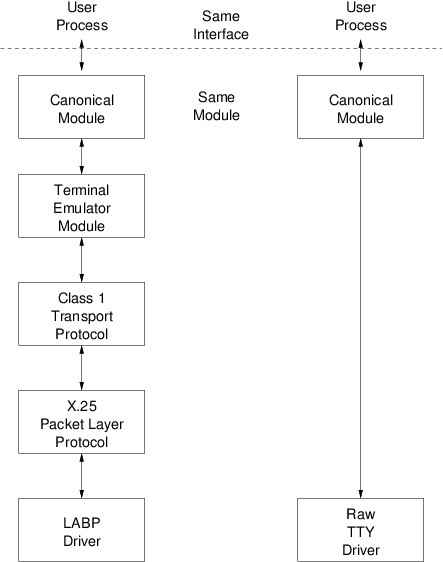

Figure 55 shows the same canonical module (for example, one that provides delete and kill processing on character strings) reused in two different Streams. This module would typically be implemented as a filter, with no downstream service interface. In both cases, a tty interface is presented to the Stream’s user process since the module is nearest the Stream head.
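The line-editing work such a filter performs is small and independent of the Stream it sits in. This minimal sketch shows that work as a plain C function on a buffer rather than as a STREAMS put procedure. The name canon_edit() is hypothetical. The erase and kill characters are parameters; the traditional UNIX defaults were '#' and '@'.

```c
#include <stddef.h>

/* Apply erase and kill processing to buf[0..len) in place and return
 * the edited length.  The erase character deletes the previous
 * character (if any); the kill character discards the whole line
 * typed so far.  Writing in place is safe because the output index
 * never passes the input index. */
static size_t canon_edit(char *buf, size_t len, char erase, char kill)
{
    size_t out = 0;
    for (size_t i = 0; i < len; i++) {
        char c = buf[i];
        if (c == erase) {
            if (out > 0)
                out--;
        } else if (c == kill) {
            out = 0;
        } else {
            buf[out++] = c;
        }
    }
    return out;
}
```

Because the logic depends only on the characters it receives, the same code serves both Streams in the figure, which is the essence of module reuse.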

2 Overview

2.1 Definitions

2.2 Concepts

2.3 Application Interface

2.4 Kernel Level Facilities

2.5 Subsystems

3 Mechanism

This chapter describes how applications programs create and interact with a Stream using traditional and standardized STREAMS system calls. General system calls and STREAMS-specific system calls together provide the interface that user level processes require when implementing user level applications programs.

3.1 Mechanism Overview

The system call interface provided by STREAMS is upward compatible with the traditional character device system calls.

STREAMS devices appear as character device nodes within the file system in the GNU/Linux system.

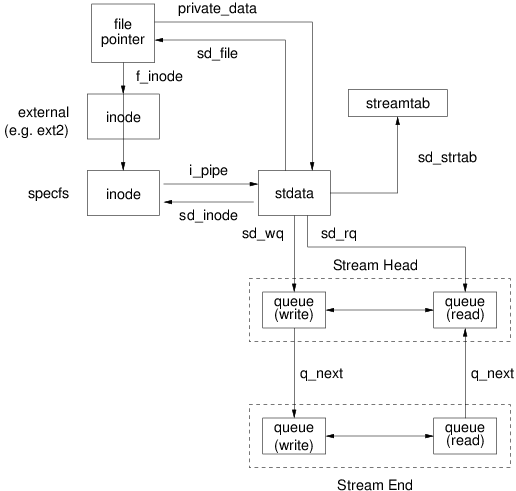

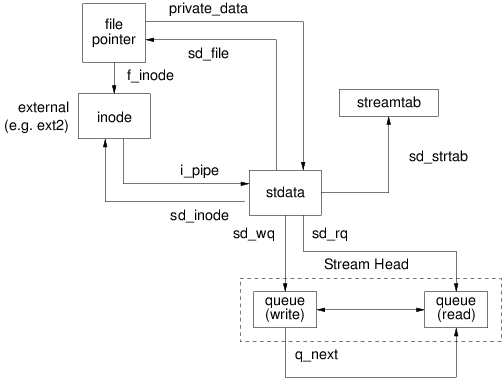

The open(2s) system call recognizes that a character special file is a STREAMS device, creates a Stream and associates it with a device in the same fashion as a character device.

Once open, a user process can send and receive data to and from the STREAMS special file using the traditional write(2s) and read(2s) system calls in the same manner as is performed on a traditional character device special file.

Character device input-output controls using the ioctl(2s) system call can also be performed on a STREAMS special file. STREAMS defines a set of standard input-output control commands (see ioctl(2p) and streamio(7)) specific to STREAMS special files.

Input-output controls that are defined for a specific device are also supported, as they are for character device drivers.

With support for these general character device input and output system calls, it is possible to implement a STREAMS device driver in such a way that an application is unaware that it has opened and is controlling a STREAMS device driver: the application can treat the device exactly as it would a character device. This makes it possible to convert an existing character device driver to STREAMS and to gain the portability, migration, substitution and reuse benefits of the STREAMS framework.
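To illustrate, the helper below uses only write(2), so nothing in it depends on whether the descriptor refers to a pipe, a tty, or a STREAMS special file. This is a minimal sketch: write_all() is a hypothetical name, and no STREAMS device path is assumed. It simply retries partial writes and writes interrupted by a signal, as careful character device code does.

```c
#include <errno.h>
#include <unistd.h>

/* Write all len bytes of buf to fd.  Retries after partial writes and
 * after EINTR.  Returns len on success or -1 on error (errno set). */
static ssize_t write_all(int fd, const void *buf, size_t len)
{
    const char *p = buf;
    size_t left = len;
    while (left > 0) {
        ssize_t n = write(fd, p, left);
        if (n < 0) {
            if (errno == EINTR)
                continue;           /* interrupted: try again */
            return -1;
        }
        p += n;
        left -= (size_t)n;
    }
    return (ssize_t)len;
}
```

The same helper can be used unchanged on a descriptor returned by open(2s) for a STREAMS device.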

STREAMS provides STREAMS-specific system calls and ioctl(2s) commands, in addition to support for the traditional character device I/O system calls and ioctl(2s) commands.

The poll(2s) system call15 provides the ability for the application to poll multiple Streams for a wide range of events.

The putmsg(2s) and putpmsg(2s) system calls provide the ability for applications programs to transfer both control and data information to the Stream. The write(2s) system call only supports the transfer of data to the Stream, whereas putmsg(2s) and putpmsg(2s) permit the transfer of prioritized control information in addition to data.
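The following model summarizes the documented XSI rules that determine which message the Stream head builds from the arguments to putpmsg(2s). It does not call putpmsg() itself. The enum names and putpmsg_type() are hypothetical stand-ins for the M_DATA, M_PROTO and M_PCPROTO message types and the MSG_HIPRI and MSG_BAND flags. In the real call, a part is absent when its strbuf pointer is NULL or its len is -1; this model uses only the length. putmsg(2s) corresponds to the band 0 case, with RS_HIPRI in the role of MSG_HIPRI.

```c
#include <errno.h>

/* Model names only; they mirror but are not the kernel definitions. */
enum mtype { MDL_NONE, MDL_M_DATA, MDL_M_PROTO, MDL_M_PCPROTO };
enum pflag { PF_HIPRI, PF_BAND };

/* Return the type of the first message block the Stream head would
 * send (any data part follows in M_DATA blocks), MDL_NONE when nothing
 * is sent, or -EINVAL for an invalid combination.  A part is present
 * when its length is >= 0. */
static int putpmsg_type(int ctl_len, int data_len, int band, enum pflag f)
{
    int has_ctl = ctl_len >= 0;
    int has_data = data_len >= 0;

    if (f == PF_HIPRI) {
        /* High priority needs a control part and band 0. */
        if (!has_ctl || band != 0)
            return -EINVAL;
        return MDL_M_PCPROTO;
    }
    if (band < 0 || band > 255)         /* priority bands are 0..255 */
        return -EINVAL;
    if (has_ctl)
        return MDL_M_PROTO;             /* control (+ data) in band */
    if (has_data)
        return MDL_M_DATA;              /* data only, in band */
    return MDL_NONE;                    /* nothing sent; call returns 0 */
}
```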

The getmsg(2s) and getpmsg(2s) system calls provide the ability for applications programs to receive both control and data information from the Stream. The read(2s) system call can only support the transfer of data (and in some cases the inline control information), whereas getmsg(2s) and getpmsg(2s) permit the transfer of prioritized control information in addition to data.
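The "in some cases" above refers to the protocol read options that an application can select with the I_SRDOPT ioctl. By default (RPROTNORM), read() fails with EBADMSG when a message with a control part is at the front of the Stream head read queue. RPROTDAT delivers the control part as data, and RPROTDIS discards it. The sketch below models only that decision. The enum values P_NORM, P_DAT and P_DIS, struct model_msg and model_read() are hypothetical, and the other read modes are not modelled.

```c
#include <errno.h>
#include <string.h>

/* Hypothetical names mirroring RPROTNORM, RPROTDAT and RPROTDIS. */
enum prot_opt { P_NORM, P_DAT, P_DIS };

/* A message at the front of the Stream head read queue. */
struct model_msg {
    const char *ctl;  size_t ctl_len;   /* ctl == NULL: no control part */
    const char *data; size_t data_len;  /* data part */
};

/* Copy what read(2) would return into out (at most cap bytes).
 * Returns the byte count, or -EBADMSG if read() would fail. */
static long model_read(const struct model_msg *m, enum prot_opt opt,
                       char *out, size_t cap)
{
    size_t n = 0, k;
    if (m->ctl != NULL) {
        if (opt == P_NORM)
            return -EBADMSG;            /* default: control not readable */
        if (opt == P_DAT) {             /* control delivered as data */
            n = m->ctl_len < cap ? m->ctl_len : cap;
            memcpy(out, m->ctl, n);
        }                               /* P_DIS: control discarded */
    }
    k = m->data_len < cap - n ? m->data_len : cap - n;
    if (k > 0)
        memcpy(out + n, m->data, k);
    return (long)(n + k);
}
```

getmsg(2s) has no such restriction: it returns the control part and the data part in separate buffers.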

Implementation of standardized service primitive interfaces is enabled through the use of the putmsg(2s), putpmsg(2s), getmsg(2s) and getpmsg(2s) system calls.
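Service primitives are typically carried in the control part as a fixed header followed by variable-length fields located by length and offset. This is the layout convention used by interfaces such as TPI and DLPI. The sketch below encodes one such primitive into a control buffer. The primitive HYP_CONN_REQ, struct hyp_conn_req and build_conn_req() are hypothetical and are not the definitions of any real interface.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical connect-request primitive: fixed header, then the
 * destination address at dest_offset within the same buffer. */
#define HYP_CONN_REQ 1

struct hyp_conn_req {
    int32_t prim_type;      /* always HYP_CONN_REQ */
    int32_t dest_length;    /* bytes of destination address */
    int32_t dest_offset;    /* offset from start of control buffer */
};

/* Build the control part in buf.  Returns its total length, or -1 if
 * buf is too small. */
static int build_conn_req(char *buf, size_t cap,
                          const void *addr, size_t alen)
{
    struct hyp_conn_req hdr;
    size_t total = sizeof hdr + alen;

    if (total > cap)
        return -1;
    hdr.prim_type = HYP_CONN_REQ;
    hdr.dest_length = (int32_t)alen;
    hdr.dest_offset = (int32_t)sizeof hdr;
    memcpy(buf, &hdr, sizeof hdr);          /* memcpy avoids alignment traps */
    memcpy(buf + sizeof hdr, addr, alen);   /* address follows the header */
    return (int)total;
}
```

An application would then describe buf and the returned length in the buf and len members of a struct strbuf and pass it as the control part of putmsg(2s). The receiving module decodes the primitive by reading the header and following dest_offset.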

STREAMS also provides kernel level utilities and facilities for the development of kernel resident STREAMS modules and drivers. Within the STREAMS framework, the Stream head is responsible for conversion between STREAMS messages passed up and down a Stream and the system call interface presented to user level applications programs. The Stream head is common to all STREAMS special files, so the conversion between the system call interface and the messages passed on the Stream does not have to be reimplemented by each module and device driver writer, as is the case for traditional character device I/O.

3.1.1 STREAMS System Calls

The STREAMS-related system calls are:

open(2s) | Open a STREAMS special file and create a new (or access an existing) Stream. |

close(2s) | Close a STREAMS special file and possibly cause the destruction of a Stream (i.e., on the last close of the Stream). |

read(2s) | Read data from an open Stream. |

write(2s) | Write data to an open Stream. |

ioctl(2s) | Control an open Stream. |

getmsg(2s), getpmsg(2s) | Receive a (prioritized) message at the Stream head. |

putmsg(2s), putpmsg(2s) | Send a (prioritized) message from the Stream head. |

poll(2s) | Receive notification when selected events occur on one or more Streams. |

pipe(2s) | Create a channel that provides a STREAMS-based bidirectional communication path between multiple processes. |

3.2 Stream Construction

STREAMS constructs a Stream as a doubly linked list of kernel data structures. Elements of the linked list are queue pairs that represent the instantiation of a Stream head, modules and drivers. Linear segments of linked queue pairs can be connected to multiplexing drivers to form complex tree topologies. The branches of the tree are closest to the user level process and the roots of the tree are closest to the device driver.

The uppermost queue pair of a Stream represents the Stream head. The lowermost queue pair of a Stream represents the Stream end: a device driver, a pseudo-device driver or, in the case of a STREAMS-based pipe, another Stream head.
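The linkage can be pictured with a much-simplified model. In this model each queue points to the next queue in its own direction: write queues point downstream and read queues point upstream. Pushing a module splices its queue pair in directly beneath the Stream head. struct queue, struct qpair, qp_link() and qp_push() are hypothetical and are far simpler than the kernel's own queue structures.

```c
#include <stddef.h>

/* Simplified model of one queue; not the kernel's queue_t. */
struct queue {
    const char *name;
    struct queue *next;         /* next queue in this direction */
};

struct qpair {
    struct queue rd;            /* upstream (read) half */
    struct queue wr;            /* downstream (write) half */
};

/* Join two adjacent pairs: the write side flows down from upper to
 * lower, and the read side flows up from lower to upper. */
static void qp_link(struct qpair *upper, struct qpair *lower)
{
    upper->wr.next = &lower->wr;
    lower->rd.next = &upper->rd;
}

/* Insert mod directly beneath head, as pushing a module does. */
static void qp_push(struct qpair *head, struct qpair *mod)
{
    struct queue *wbelow = head->wr.next;
    if (wbelow != NULL) {
        /* Recover the pair below from its write half (container_of idiom). */
        struct qpair *below = (struct qpair *)
            ((char *)wbelow - offsetof(struct qpair, wr));
        qp_link(mod, below);
    }
    qp_link(head, mod);
}
```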

The Stream head is responsible for conversion between a user level process using the system call interface and STREAMS messages passed up and down the Stream. The Stream head uses the same set of kernel routines available to module and driver writers to communicate with the Stream via the queue pair associated with the Stream head.

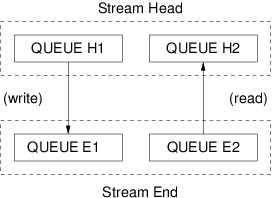

Figure 13 illustrates the queue pairs in the most basic of Streams: one consisting of only a Stream head and a Stream end. Depicted are the upstream (read) and downstream (write) paths along the Stream. Of the uppermost queue pair illustrated, ‘H1’ is the upstream (read) half of the Stream head queue pair; ‘H2’, the downstream (write) half. Of the lowermost queue pair illustrated, ‘E2’ is the upstream half of the Stream end queue pair; ‘E1’, the downstream half.

Each queue specifies an entry point (that is, a procedure) that is used to process messages arriving at the queue. The procedures for queues ‘H1’ and ‘H2’ process messages sent to (or that arrive at) the Stream head. These procedures are defined by the STREAMS subsystem and are responsible for the interface between STREAMS related system calls and the Stream. The procedures for queues ‘E1’ and ‘E2’ process messages at the Stream end. These procedures are defined by the device driver, pseudo-device driver, or Stream head at the Stream end (tail). In accordance with the procedures defined for each queue, messages are processed by the queue and typically passed from queue to queue along the linked list segment.
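The flow of a message from queue to queue can be sketched with put procedures held as function pointers. The Stream in this model has one module between the head and a loop-back driver. A message written at ‘H2’ is upper-cased by the module's write queue, turned around by the driver at ‘E1’, and stored by the Stream head's read queue ‘H1’ for a later read(). The turnaround resembles a driver calling qreply(). struct q, struct stream, model_putnext() and the put procedures are hypothetical simplifications; real messages are mblk_t chains, not strings.

```c
#include <ctype.h>
#include <string.h>

/* Hypothetical, simplified model: messages are C strings. */
struct q;
typedef void (*putproc_t)(struct q *, char *msg);

struct q {
    putproc_t put;          /* entry point for arriving messages */
    struct q *next;         /* next queue in the same direction */
    struct q *other;        /* other half of the same queue pair */
    char saved[64];         /* used only by the Stream head read queue */
};

/* Hand the message to the next queue's put procedure. */
static void model_putnext(struct q *q, char *msg) { q->next->put(q->next, msg); }

static void pass_put(struct q *q, char *msg) { model_putnext(q, msg); }

/* Module write queue: upper-case the data, then pass it downstream. */
static void mod_wput(struct q *q, char *msg)
{
    for (char *p = msg; *p; p++)
        *p = (char)toupper((unsigned char)*p);
    model_putnext(q, msg);
}

/* Loop-back driver (E1): send the message upstream from E2's side. */
static void drv_wput(struct q *q, char *msg) { model_putnext(q->other, msg); }

/* Stream head read queue (H1): keep the message for read(). */
static void head_rput(struct q *q, char *msg)
{
    strncpy(q->saved, msg, sizeof q->saved - 1);
    q->saved[sizeof q->saved - 1] = '\0';
}

struct stream { struct q h1, h2, mr, mw, e1, e2; };

static void stream_init(struct stream *s)
{
    memset(s, 0, sizeof *s);
    s->h1.put = head_rput;  s->h2.put = pass_put;   /* Stream head */
    s->mr.put = pass_put;   s->mw.put = mod_wput;   /* module */
    s->e1.put = drv_wput;                           /* driver write side */
    s->h2.next = &s->mw;    s->mw.next = &s->e1;    /* downstream path */
    s->e2.next = &s->mr;    s->mr.next = &s->h1;    /* upstream path */
    s->e1.other = &s->e2;                           /* E1/E2 are one pair */
}
```

Calling s.h2.put(&s.h2, msg) on a Stream initialized by stream_init() drives the message down the write side and back up the read side. Each queue acts only on what its own put procedure defines.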